As the project enters its 27th month, releases for most technologies are already available and are undergoing the final steps of integration.

To demonstrate the SAFEXPLAIN approach, project partners are building an open source demo that mimics an industrial case study without the intellectual property (IP) constraints that such case studies usually entail. The demo shows how the project technologies are being integrated. It demonstrates that they not only make sense individually but also work together as an end-to-end solution for designing and deploying AI-based, safety-relevant systems that adhere to safety regulations and principles.

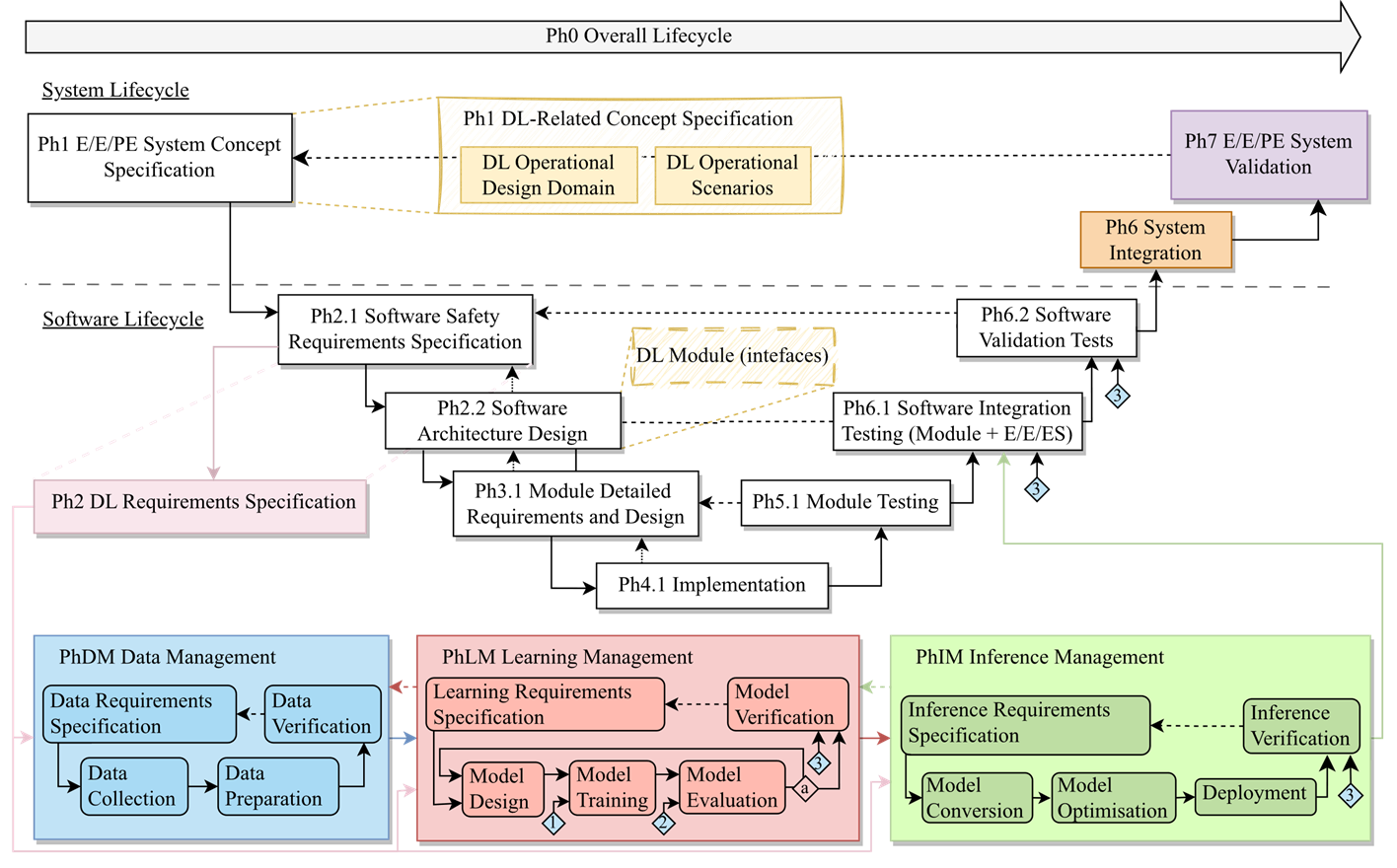

Key technologies in the development process of the open source demo include the AI-Functional Safety Management (AI-FSM) methodology. It describes how the usual development process for non-AI-based systems must be updated to incorporate AI software with safety requirements.

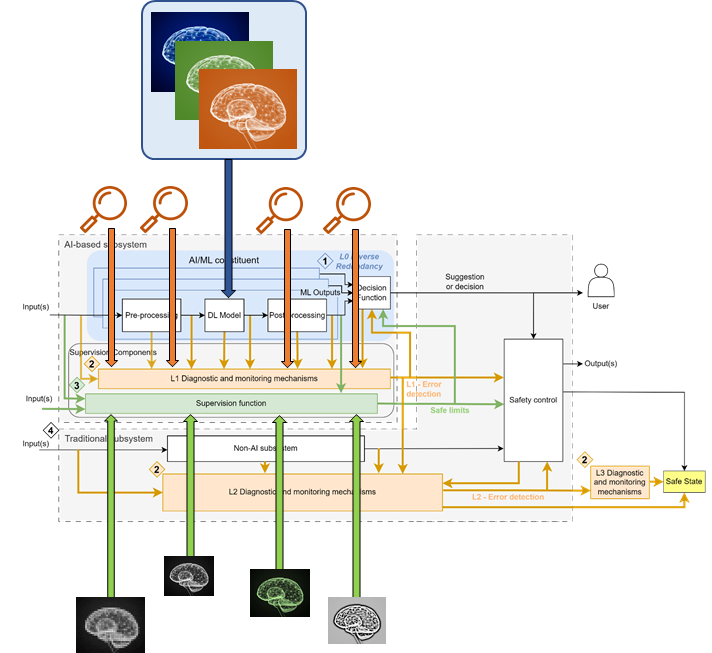

Alongside this development process, explainable AI (XAI) technologies are deployed to ensure that AI models are designed, trained and validated with sufficient thoroughness. In addition, safety patterns extended with AI components are proposed for integrating AI software into the final software architecture.

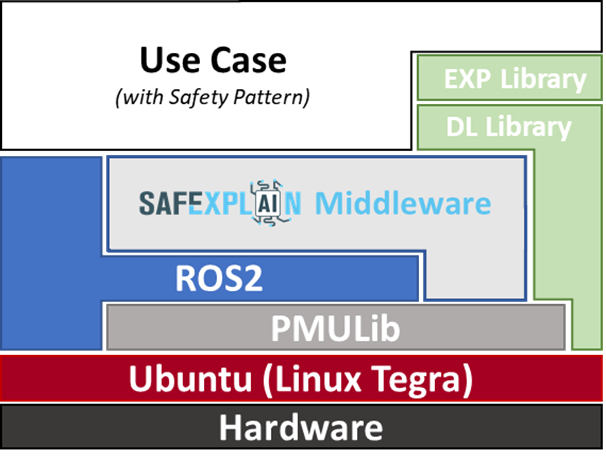

During operation, the open source demo will run on an extended middleware that supports AI software components, together with appropriate XAI components and AI diverse redundancy solutions. These provide the AI-specific safety envelope needed to meet safety requirements for AI software as well (Figure 3).