About us

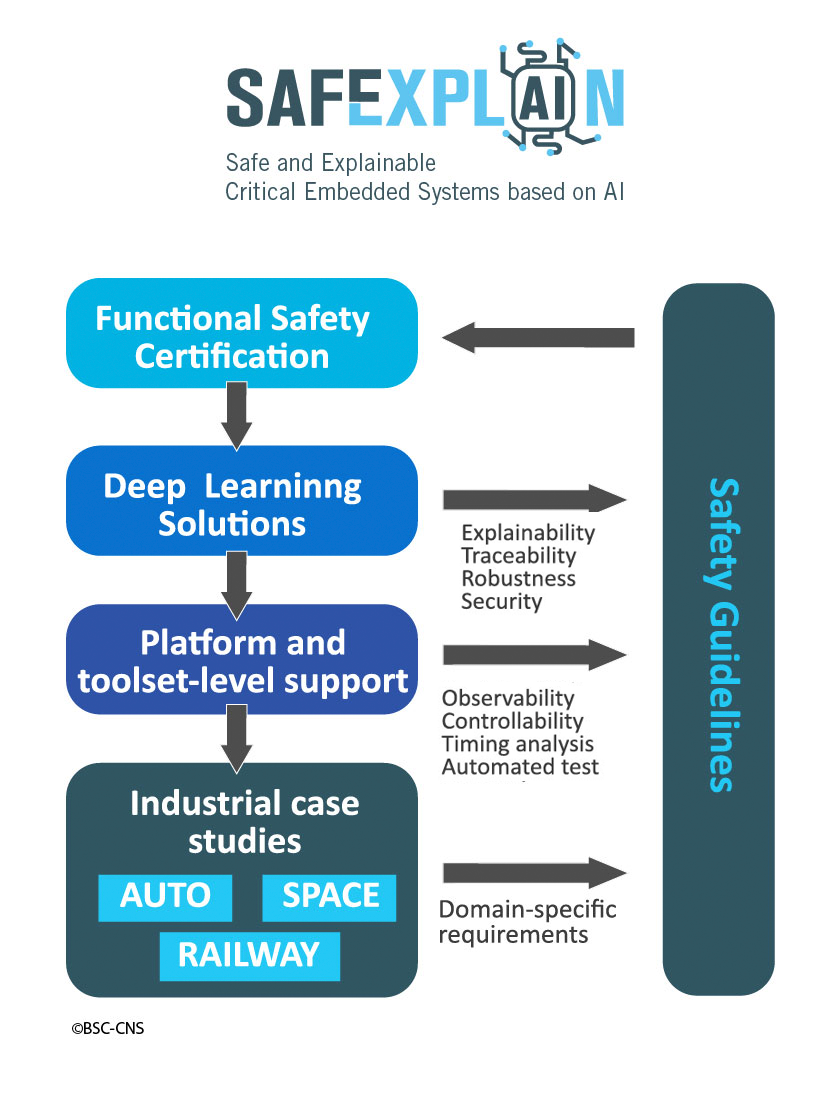

Deep Learning (DL) techniques are key to most future advanced software functions in Critical Autonomous AI-based Systems (CAIS) in cars, trains and satellites. Hence, CAIS industries depend on their ability to design, implement, qualify, and certify DL-based software products with bounded effort and cost. However, there is a fundamental gap between the Functional Safety (FUSA) requirements of CAIS and the nature of the DL solutions needed to satisfy those requirements. The lack of transparency (mainly explainability and traceability) and the data-dependent, stochastic nature of DL software clash with the need for deterministic, verifiable, pass/fail test-based software solutions in CAIS.

SAFEXPLAIN tackles this challenge with a novel and flexible approach that enables the certification, and hence the adoption, of DL-based solutions in CAIS by:

- Architecting transparent DL solutions that make it possible to explain why they satisfy FUSA requirements, with end-to-end traceability, specific approaches to explain whether predictions can be trusted, and strategies to reach (and prove) correct operation in accordance with certification standards.

- Devising alternative and increasingly complex FUSA safety design patterns for different DL usage levels (i.e. with varying safety requirements), allowing DL to be used in any CAIS functionality across varying levels of criticality and fault tolerance.

The Vision

- Provide the European industry with scientific and technical solutions that enable fully autonomous critical systems (e.g. cars, trains, satellites) that are certified and economically viable

- Increase the efficiency of Critical Autonomous Systems, since safe DL solutions can reduce CO2 emissions (by up to 80% for different types of vehicles, according to informed predictions)

- Allow the European Critical Autonomous Systems industry to benefit from DL functionalities and remain competitive in the future, while still being trustworthy

Project Description

| Field | Value |
|---|---|
| Project Name | Safe and Explainable Critical Embedded System based on AI |
| Acronym | SAFEXPLAIN |
| Grant Agreement ID | 101069595 |
| Project Coordinator | Barcelona Supercomputing Center |
| Start Date | 1 October 2022 |
| End Date | 30 September 2025 |
| Number of Partners | 6 |
| EU Contribution | €3,891,875 |