The use of Artificial Intelligence (AI) has become widespread across multiple domains, including automotive, railway, and space, the three areas of focus for the SAFEXPLAIN project. While AI offers many opportunities, safety-related applications raise significant concerns, as errors or system malfunctions could result in injuries or even fatalities.

For such safety-critical applications, traditional functional safety projects typically rely on safety patterns to address the requirements imposed by functional safety standards. However, the integration of AI introduces new challenges that require adapting traditionally employed safety patterns or even designing novel approaches.

To address these challenges, Ikerlan has analyzed strategies and developed specific solutions for implementing safety patterns in systems that incorporate AI components. Ikerlan has proposed a series of safety patterns with progressively more stringent requirements and constraints, depending on the level of AI usage within the safety-critical system.

A Reference Safety Architecture for AI Integration

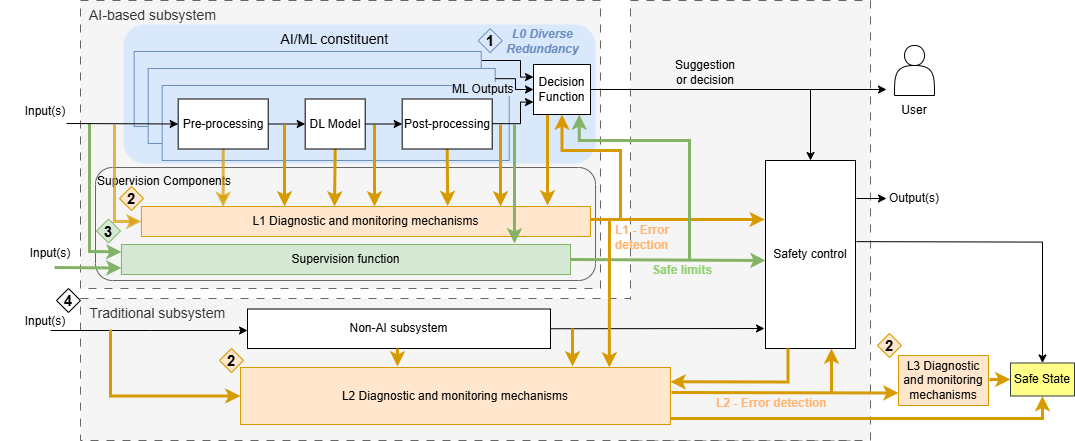

At the core of Ikerlan’s approach is the reference safety architecture pattern, illustrated in Figure 1, along with a collection of safety elements to ensure compliance with safety requirements. This architecture serves as a baseline for integrating various safety patterns and building systems while maintaining adherence to functional safety standards. The architecture must be adapted to meet the specific requirements of each safety-critical application.

Figure 1: SAFEXPLAIN reference safety architecture pattern for AI-based safety-critical systems

The reference safety architecture pattern incorporates four key safety mechanisms:

- Diverse redundancy (Rhombus 1). It focuses on redundancy within the AI component, such as employing diverse models or diverse input sensors, while excluding non-AI components.

- Supervision components:

  - Diagnostics and monitoring (Rhombus 2). Diagnostics and monitoring mechanisms applied at various hierarchical levels: L0 related to redundancy, L1 diagnostics for detecting anomalies in AI components, and L2 platform-level diagnostics, such as memory self-tests.

  - Supervision functions (Rhombus 3). Supervision functions that check both the input and the output of the AI component, identifying unsafe situations and serving as a safe envelope.

- Traditional subsystems (Rhombus 4). Traditional subsystems, including all the functions that complement the AI, as well as the fallback subsystem, which acts as a safe backup function when a functional safety problem is detected.
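To make the interplay of the four mechanisms more concrete, here is a minimal Python sketch under strong simplifying assumptions. It is not Ikerlan's implementation: the function `safe_pipeline`, the `SafetyDecision` type, scalar inputs and outputs, averaging of the redundant outputs, and all thresholds are hypothetical. The sketch covers diverse redundancy (Rhombus 1), an L0 agreement check (Rhombus 2), input and output supervision acting as a safe envelope (Rhombus 3), and a switch to a fallback subsystem (Rhombus 4). L1 anomaly detection and L2 platform diagnostics are not modelled.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical: a "model" maps a scalar sensor reading to a scalar command.
Model = Callable[[float], float]


@dataclass
class SafetyDecision:
    command: float
    source: str  # "ai" or "fallback"
    reason: str


def safe_pipeline(
    reading: float,
    models: Sequence[Model],       # diverse AI models (Rhombus 1), hypothetical
    fallback: Model,               # traditional fallback subsystem (Rhombus 4)
    input_range: tuple[float, float],
    output_range: tuple[float, float],
    max_disagreement: float,       # assumed L0 tolerance between redundant outputs
) -> SafetyDecision:
    if not models:
        raise ValueError("at least one AI model is required")

    # Supervision function, input side (Rhombus 3): reject readings
    # outside the domain the models are assumed to be validated for.
    lo_in, hi_in = input_range
    if not lo_in <= reading <= hi_in:
        return SafetyDecision(fallback(reading), "fallback", "input out of range")

    # Diverse redundancy (Rhombus 1): run every model on the same input.
    outputs = [m(reading) for m in models]

    # L0 diagnostic (Rhombus 2): redundant outputs must agree within a tolerance.
    if max(outputs) - min(outputs) > max_disagreement:
        return SafetyDecision(fallback(reading), "fallback", "redundant models disagree")

    # Assumption: combine redundant outputs by averaging.
    candidate = sum(outputs) / len(outputs)

    # Supervision function, output side (Rhombus 3): safe envelope on the command.
    lo_out, hi_out = output_range
    if not lo_out <= candidate <= hi_out:
        return SafetyDecision(fallback(reading), "fallback", "output outside safe envelope")

    return SafetyDecision(candidate, "ai", "ok")
```

For example, with two models `0.5 * x` and `0.5 * x + 0.1` and an assumed output envelope of 0 to 20, a reading of 10.0 yields an AI command of about 5.05, while a reading of 50.0 yields an averaged output of about 25.05, which leaves the envelope and hands control to the fallback. Averaging is only one way to combine redundant outputs; majority voting over discrete outputs is a common alternative.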

Looking Ahead

By introducing this comprehensive reference safety architecture, Ikerlan aims to pave the way for the safe integration of AI into safety-critical systems across diverse industries. This set of safety mechanisms is intended to reduce time to market, not only for safety-related systems but also for systems with reliability requirements. As AI continues to evolve, ensuring its safe and reliable use in critical domains will remain a top priority.