News

SAFEXPLAIN final deliverables now available!

The project's final 11 deliverables are now available. Access the project's final results. The SAFEXPLAIN deliverables provide key details about the project and its final outcomes. The following deliverables have been completed for public dissemination and approved by...

PRESS RELEASE: SAFEXPLAIN delivers final results: Trustworthy AI Framework, tools and validated case studies

After three years of research and collaboration, the EU-funded SAFEXPLAIN project, coordinated by the Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS), has released its final results: a complete, validated and independently assessed...

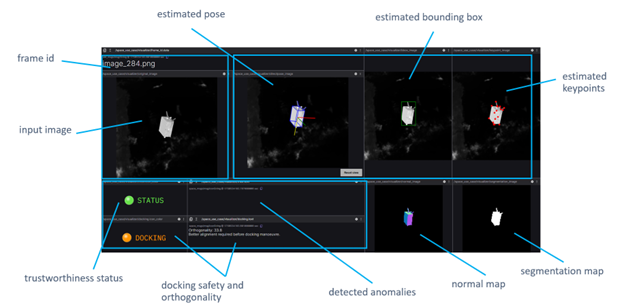

Final Space Demonstrator Results

The space case study takes place in a scenario with high relevance for the space industry: a spacecraft agent navigating towards another satellite target and attempting a docking manoeuvre. This is a typical situation in an In-Orbit Servicing mission. The case study...

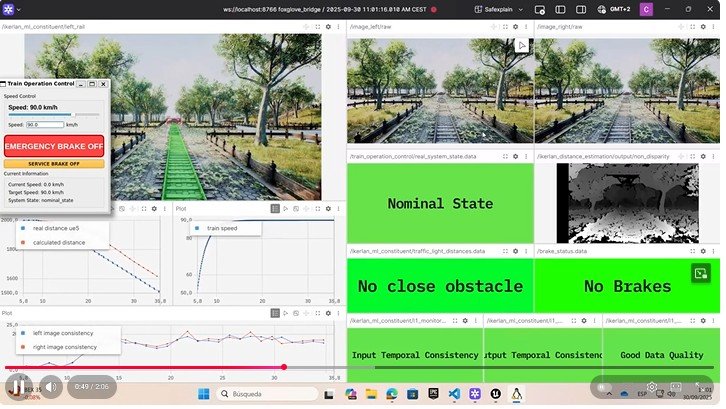

Final Railway Demonstrator Results

The SAFEXPLAIN railway demo comprises an Automatic Train Operation (ATO) system that detects obstacles on the railway and estimates their distance with the aid of cameras (i.e., right / left cameras). It is based on a ROS2 architecture and consists of four main...

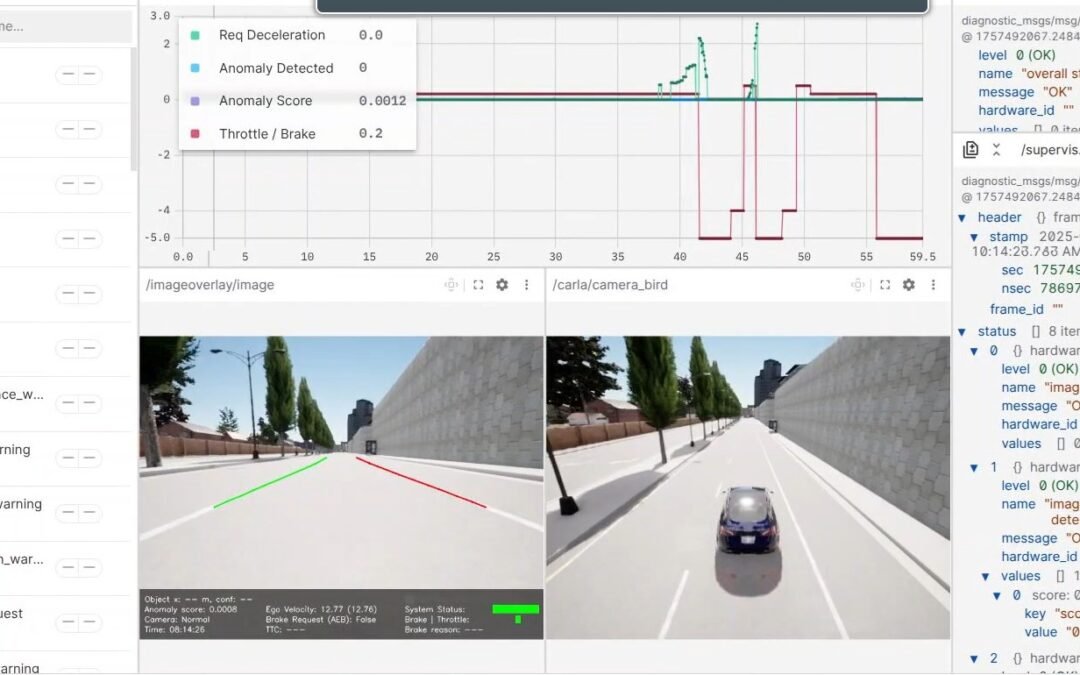

Final Automotive Demonstrator Results

The final phase of the automotive case study focused on building and validating a ROS2-based demonstrator that implements safe and explainable pedestrian emergency braking. Our architecture integrates AI perception modules (YOLOS-Tiny pedestrian detector, lane...

Trustworthy AI in Safety Critical Systems Event Signals End of SAFEXPLAIN Project but Results Live On

On 23 September 2025, members of the SAFEXPLAIN consortium joined forces with fellow European projects ULTIMATE and EdgeAI-Trust for the event “Trustworthy AI in Safety-Critical Systems: Overcoming Adoption Barriers.” The gathering brought together experts from...

Strong representation of SAFEXPLAIN in DSD-SEAA

SAFEXPLAIN partners shared key project results at the 2025 28th Euromicro Conference Series on Digital System Design (DSD). Two papers were accepted to the conference proceedings and presented on 10 and 11 September 2025 by Francisco J. Cazorla from the Barcelona...

Strengthening Europe’s Trustworthy AI Ecosystem Through Strategic Cross-Project Collaboration

The SAFEXPLAIN project has worked to advance Europe’s vision for trustworthy and human-centred AI through its strong collaboration with key European AI initiatives and fellow research projects. Over its lifetime, the project has actively contributed to strengthening...

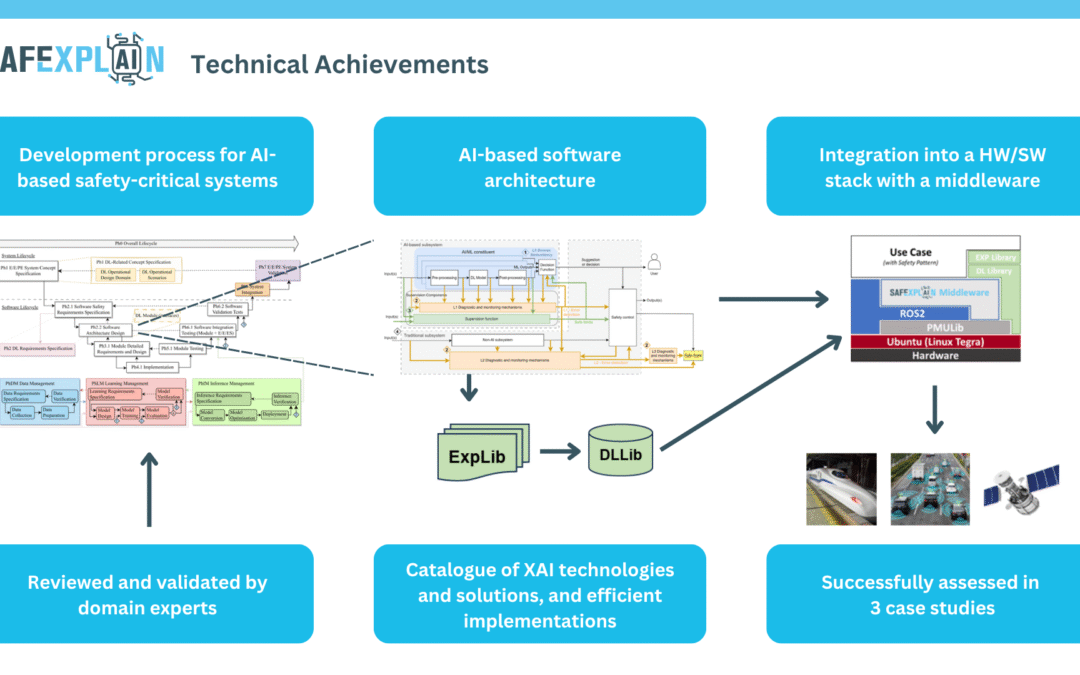

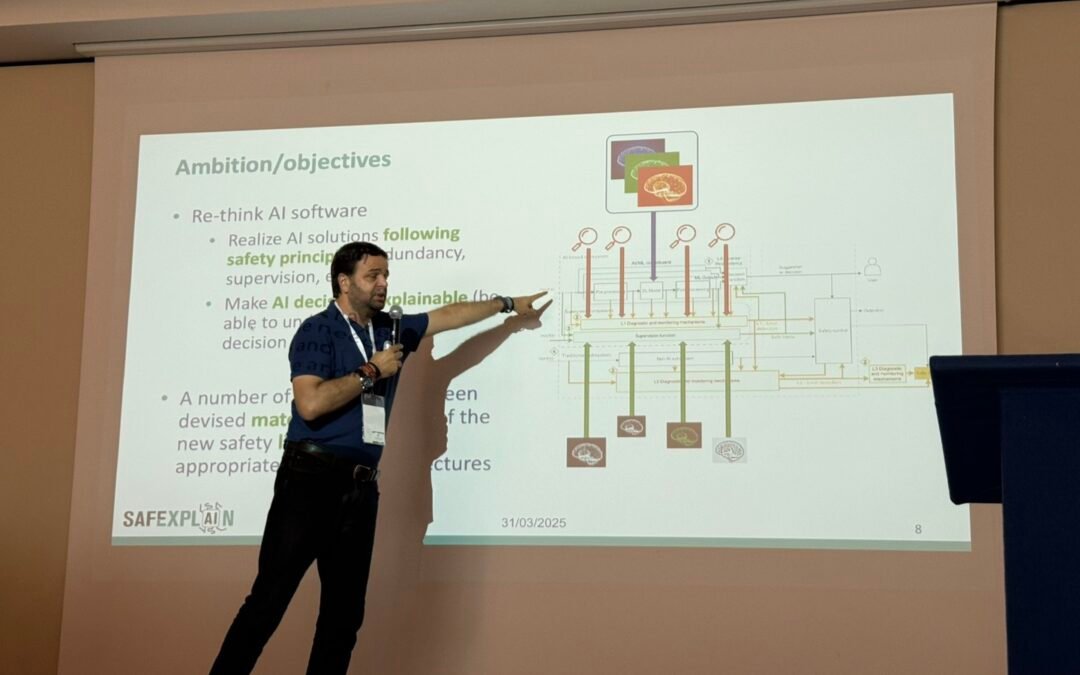

SAFEXPLAIN: Outstanding scientific solutions and practical application

SAFEXPLAIN’s success can be understood as a combination of outstanding scientific results and the vision to bring them together to solve the fundamental industrial challenges of making AI-based systems trustworthy. The project's results and network of interested parties...

Safety for AI-Based Systems

As part of SAFEXPLAIN, Exida has contributed a methodology for a verification and validation (V&V) strategy for AI-based components in safety-critical systems. The approach combines the two standards ISO 21448 (also known as SOTIF) and ISO 26262 to address...