SAFEXPLAIN: From Vision to Reality

AI Robustness & Safety

Explainable AI

Compliance & Standards

Safety Critical Applications

THE CHALLENGE: SAFE AI-BASED CRITICAL SYSTEMS

- Today’s AI allows advanced functions to run on high‑performance machines, but its “black‑box” decision‑making remains a challenge for automotive, rail, space and other safety‑critical applications, where failure or malfunction may result in severe harm.

- Machine- and deep‑learning solutions running on high‑performance hardware enable true autonomy, but until they become explainable, traceable and verifiable, they can’t be trusted in safety-critical systems.

- Each sector enforces its own rigorous safety standards to ensure the technology used is safe (Space: ECSS; Automotive: ISO 26262, ISO 21448, ISO 8800; Rail: EN 50126/8), and AI must also meet these functional safety requirements.

MAKING CERTIFIABLE AI A REALITY

Our next-generation open software platform is designed to make AI explainable, and to make systems that integrate AI compliant with safety standards. This technology bridges the gap between cutting-edge AI capabilities and the rigorous demands of safety-critical environments. By bringing together experts in AI robustness, explainable AI, functional safety and system design, and testing their solutions in safety-critical applications in the space, automotive and rail domains, we’re making sure we’re contributing to trustworthy and reliable AI.

Key activities:

SAFEXPLAIN is enabling the use of AI in safety-critical systems by closing the gap between AI capabilities and functional safety requirements.

See SAFEXPLAIN technology in action

CORE DEMO

The Core Demo is built on a flexible skeleton of replaceable building blocks for Inference, Supervision or Diagnostic components that allow it to be adapted to different scenarios. Full domain-specific demos are available on the technologies page.
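The idea of a skeleton with replaceable building blocks can be illustrated with a minimal Python sketch. It is not the SAFEXPLAIN platform's API: every name in it (`Pipeline`, `mean_inference`, `range_supervisor`, `text_diagnostic`) is hypothetical, and the toy logic only shows how an inference, a supervision and a diagnostic component might be swapped independently.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

# Hypothetical component signatures, chosen for illustration only.
Inference = Callable[[Sequence[float]], float]               # input -> prediction
Supervisor = Callable[[Sequence[float], float], bool]        # (input, prediction) -> trusted?
Diagnostic = Callable[[Sequence[float], float, bool], str]   # -> human-readable record


@dataclass
class Pipeline:
    """A fixed skeleton whose three blocks can each be replaced."""
    infer: Inference
    supervise: Supervisor
    diagnose: Diagnostic

    def step(self, x: Sequence[float]) -> Tuple[float, bool, str]:
        y = self.infer(x)                 # run the (stand-in) model
        ok = self.supervise(x, y)         # check the prediction
        return y, ok, self.diagnose(x, y, ok)  # record what happened


def mean_inference(x: Sequence[float]) -> float:
    # Stand-in for a model: averages the inputs.
    return sum(x) / len(x)


def range_supervisor(x: Sequence[float], y: float) -> bool:
    # Accepts only predictions inside the range [0, 1].
    return 0.0 <= y <= 1.0


def text_diagnostic(x: Sequence[float], y: float, ok: bool) -> str:
    return f"prediction={y:.2f} trusted={ok}"


pipeline = Pipeline(mean_inference, range_supervisor, text_diagnostic)
result = pipeline.step([0.2, 0.4])

# Adapting to another scenario: replace only the supervision block.
strict = Pipeline(mean_inference, lambda x, y: y < 0.1, text_diagnostic)
strict_result = strict.step([0.2, 0.4])
```

Because the skeleton depends only on the three callables' signatures, a different scenario can reuse `Pipeline` unchanged and supply its own blocks.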

SPACE

Mission autonomy and AI to enable fully autonomous operations during space missions

Specific activities: Identify the target, estimate its pose, and monitor the agent position to signal potential drifts, sensor faults, etc.

Use of AI: Decision ensemble
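The page does not describe how the decision ensemble works. As a generic illustration only, one common form of ensemble decision is a majority vote that withholds a result when the members disagree too much. The function name `ensemble_decision` and the `min_agreement` threshold below are assumptions, not part of the SAFEXPLAIN space case study.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence, Tuple


def ensemble_decision(
    votes: Sequence[Hashable], min_agreement: float = 0.5
) -> Tuple[Optional[Hashable], float]:
    """Majority vote over member outputs (hypothetical sketch).

    Returns (label, agreement). The label is None when the share of
    members backing the most common output does not exceed min_agreement,
    so the caller can treat the result as untrusted.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    return (label if agreement > min_agreement else None), agreement


# Two of three members agree, so the majority label is returned.
decision, agreement = ensemble_decision(["target", "target", "debris"])

# An even split is rejected: no label is returned.
split_decision, split_agreement = ensemble_decision(["target", "debris"])
```

Returning the agreement ratio alongside the label lets a monitoring component flag low-confidence decisions rather than silently accepting them.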

AUTOMOTIVE

Advanced methods and procedures to enable self-driving cars to accurately detect road users and predict their trajectories

Specific activities: Validate the system’s capacity to detect pedestrians, issue warnings, and perform emergency braking

Use of AI: Decision Function (mainly visualization oriented)

BoF workshop at Future Ready Solutions Event — Trustworthy AI: main innovations and future challenges

Project coordinator Jaume Abella from the Barcelona Supercomputing Center represented the project at the Birds of a Feather session, “TrustworthyAI Cluster: Main innovations and future challenges”, together with cluster siblings EVENFLOW, TALON and ULTIMATE.

BSC Webinar Demonstrates Interoperability of SAFEXPLAIN Platform Tech & Tools

The third webinar in the SAFEXPLAIN webinar series will share the innovative infrastructure behind the AI-FSM and XAI methodologies. Participants will gain insights into the integration of the proposed solutions and how they are designed to enhance the safety, portability and adaptability of AI systems.

Showcasing Project Results in 2 HiPEAC’25 Workshops

The 2025 HiPEAC conference successfully brought together more than 750 experts in computer architecture, programming models, compilers and operating systems for general-purpose, embedded and cyber-physical systems. This annual event is a premier forum for...

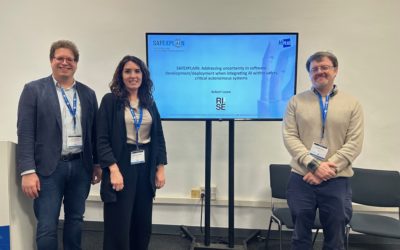

SAFEXPLAIN presents at 22º Automotive SPIN Italia WS

Announcement from the Automotive SPIN Italia website: The SAFEXPLAIN project will be present at the Automotive SPIN Italia 22º Workshop on Automotive Software & System. Carlo Donzella from partner exida development will share insights into "A Tale of Machine...

Challenges and approaches for the development of Artificial Intelligence (AI)-based Safety-Critical Systems

Jon Perez Cerrolaza from SAFEXPLAIN was invited to give a presentation on the “Challenges and approaches for the development of Artificial Intelligence (AI)-based Safety-Critical Systems” at the Instituto Tecnológico de Informática (ITI). The talk was well received by around 25 researchers from the ITI and the Polytechnic University of Valencia who were interested in the SAFEXPLAIN perspective on AI, Safety & Explainability and Trustworthiness.

SAFEXPLAIN at ERTS 2024

SAFEXPLAIN presented at the 2024 Embedded Real Time System Congress, held in Toulouse, France, on 11-12 June 2024. Barcelona Supercomputing Center researcher Martí Caro presented “Software-Only Semantic Diverse Redundancy for High-Integrity AI-Based...