Article by: AIKO

Docking with adequate precision

An autonomous Guidance, Navigation and Control (GNC) system is the component of the on-board software responsible for navigating a spacecraft. It acquires information from the available sensors (cameras, star trackers, inertial measurement units and more), estimates the position and attitude of the spacecraft, and performs the appropriate manoeuvres, such as motion, orbital station-keeping or approaching a target.

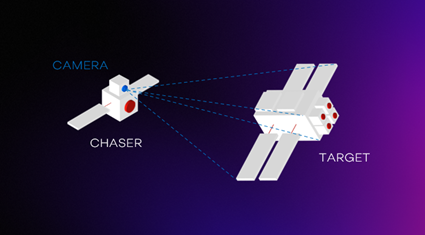

In the context of SAFEXPLAIN, the space scenario envisions a crewed spacecraft performing a docking manoeuvre with an uncooperative target (a space station or another spacecraft) at a specific docking site. The GNC system must be able to estimate the pose of the docking target and of the spacecraft itself, compute a trajectory towards the target, and send commands to the actuators to perform the docking manoeuvre.

The safety goal is to dock with adequate precision and avoid crashing into or damaging the assets. This is especially important because both the spacecraft and the target are crewed, and even minor damage to the vehicles may put the crew’s safety at risk. Moreover, safe manoeuvring requires approaching the target orthogonally to the docking site.
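
The orthogonal-approach requirement can be illustrated with a small geometric check. The sketch below is not part of the SAFEXPLAIN software: the function names and the 5-degree tolerance are hypothetical, and it assumes that all vectors are expressed in the same target-fixed frame and that the docking-port normal points outward from the docking site.

```python
import math


def approach_angle_deg(chaser_pos, port_pos, port_normal):
    """Angle in degrees between the port-to-chaser line and the port normal.

    All arguments are 3-element sequences in the same target-fixed frame.
    0 degrees means the chaser sits exactly on the docking axis, i.e. it
    approaches orthogonally to the docking plane.
    """
    # Vector from the docking port to the chaser.
    offset = [c - p for c, p in zip(chaser_pos, port_pos)]
    offset_norm = math.sqrt(sum(x * x for x in offset))
    normal_norm = math.sqrt(sum(x * x for x in port_normal))
    if offset_norm == 0.0 or normal_norm == 0.0:
        raise ValueError("zero-length vector: the angle is undefined")
    cos_angle = sum(o * n for o, n in zip(offset, port_normal))
    cos_angle /= offset_norm * normal_norm
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))


def is_within_approach_cone(chaser_pos, port_pos, port_normal,
                            max_angle_deg=5.0):
    """True if the chaser lies inside a cone around the docking axis.

    max_angle_deg is a hypothetical tolerance chosen for illustration.
    """
    angle = approach_angle_deg(chaser_pos, port_pos, port_normal)
    return angle <= max_angle_deg
```

For example, a chaser 10 m out along the port normal with a 10 cm lateral offset is well inside the cone, while a chaser displaced 10 m sideways at the same range is 45 degrees off-axis and fails the check.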

This case study focuses on the pose estimation module, a specific module of the GNC system. This component takes monocular grayscale camera images of the target as input and must compute the target’s pose, namely the relative position and rotation (three dimensions each) between the target and the chaser. This is a fundamental step of the GNC pipeline, both for planning a trajectory and for computing the actuators’ commands to follow it.

Data

Pose estimation is a complex task, and Deep Learning (DL) provides a powerful tool for tackling it. However, DL depends on many factors, one of which is data. Real datasets are difficult to find, especially for unusual scenarios such as those involving critical operations and harsh environmental conditions. For this reason, many research projects start their work with synthetic datasets.

Simulated data not only offers great freedom in generating the data itself; it also comes with the associated metadata, which is essential for training a DL model. This flexibility extends to simulating extreme or catastrophic conditions, which are crucial for the balanced training of artificial intelligence (AI) models.

Ensuring a comprehensive representation of all possible scenarios, especially critical cases, is fundamental for the models’ inference capabilities and their safety.

Pictures comprising a target spacecraft and a background showing deep space and Earth are generated from different perspectives. Lighting conditions are simulated on the spacecraft, and many settings are configurable by the user, including camera properties and the elements that appear in the images.

Algorithm

The SAFEXPLAIN space case study employs a multitask DL model based on convolutional neural networks (CNNs) to address the pose estimation challenge described above. The model processes a single input, a simulated camera image of the target satellite, and produces multiple outputs, leveraging the multitask framework to improve the efficiency and accuracy of the task.

The pose estimation multitask model was trained on a dataset of tens of thousands of images, and results were refined over several phases. Data augmentation and noise addition were among the techniques employed to enhance the model’s robustness.
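
As an illustration of what noise addition and augmentation can look like for grayscale images, here is a minimal sketch. It is not the project’s training pipeline: the function names, the noise level and the brightness range are hypothetical, and the image is assumed to be a list of rows of pixel intensities in [0.0, 1.0].

```python
import random


def _clip(value):
    """Keep a pixel intensity inside the valid [0.0, 1.0] range."""
    return min(1.0, max(0.0, value))


def add_gaussian_noise(image, sigma=0.02, rng=None):
    """Return a copy of the image with zero-mean Gaussian noise added.

    sigma is the noise standard deviation (hypothetical default).
    """
    if rng is None:
        rng = random.Random()
    return [[_clip(px + rng.gauss(0.0, sigma)) for px in row]
            for row in image]


def scale_brightness(image, factor):
    """Return a copy of the image with every pixel multiplied by factor."""
    return [[_clip(px * factor) for px in row] for row in image]


def augment(image, rng=None):
    """Apply a random brightness change followed by Gaussian noise.

    The input image is left untouched; a new image is returned.
    """
    if rng is None:
        rng = random.Random()
    factor = rng.uniform(0.8, 1.2)  # hypothetical brightness range
    return add_gaussian_noise(scale_brightness(image, factor),
                              sigma=0.02, rng=rng)
```

Both transformations are photometric: they change pixel values but not the geometry of the scene, so the pose label attached to an image stays valid. Geometric augmentations such as rotating or cropping the image would require the label to be updated accordingly.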

The evaluation of our model’s performance in direct pose estimation considered translation and rotation errors separately. Translation errors stabilized on the order of centimeters, and rotation errors within a few degrees. Given that the operational distance from the target ranged between 1 and 20 meters, these results were considered very promising.
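
To make the two error types concrete, the sketch below shows one common way of computing them. It is an assumption for illustration, not necessarily the metric used in the project: translation error is taken as the Euclidean distance between predicted and true positions in meters, rotation is assumed to be expressed as a quaternion in (w, x, y, z) order, and rotation error is the smallest angle between the two orientations. The range-normalized error is likewise a hypothetical addition.

```python
import math


def translation_error(t_pred, t_true):
    """Euclidean distance between predicted and true positions."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(t_pred, t_true)))


def relative_translation_error(t_pred, t_true):
    """Translation error divided by the true distance to the target."""
    distance = math.sqrt(sum(t * t for t in t_true))
    if distance == 0.0:
        raise ValueError("true distance is zero")
    return translation_error(t_pred, t_true) / distance


def _unit(q):
    """Normalize a quaternion given as (w, x, y, z)."""
    norm = math.sqrt(sum(c * c for c in q))
    if norm == 0.0:
        raise ValueError("zero quaternion")
    return [c / norm for c in q]


def rotation_error_deg(q_pred, q_true):
    """Smallest rotation angle in degrees between two orientations."""
    dot = sum(a * b for a, b in zip(_unit(q_pred), _unit(q_true)))
    # q and -q describe the same rotation, so the sign is irrelevant.
    dot = min(1.0, abs(dot))
    return math.degrees(2.0 * math.acos(dot))
```

With illustrative numbers (not the project’s results), the same 3 cm translation error corresponds to 3% of the range at 1 m from the target but only 0.15% at 20 m, which is why the operational range matters when judging centimeter-level errors.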

After training and testing, the model was converted from its original PyTorch implementation to the Open Neural Network Exchange (ONNX) format. This format facilitates model sharing and deployment across different platforms and frameworks, enhancing the versatility and applicability of our AI solution. Initial porting tests and executions were carried out, compatibility with the SAFEXPLAIN platform, the NVIDIA Jetson Orin, was fully assessed, and the converted full-branches model showed good performance.

This marks an important milestone for the project. It provides a ready benchmark case study for applying the guidelines, safety patterns and explainability methods that are being developed in other parts of the project. The next steps will involve fully integrating the space case study into the SAFEXPLAIN middleware and endowing the implementation with supervision and monitoring, to ensure a safe and explainable execution of the application.