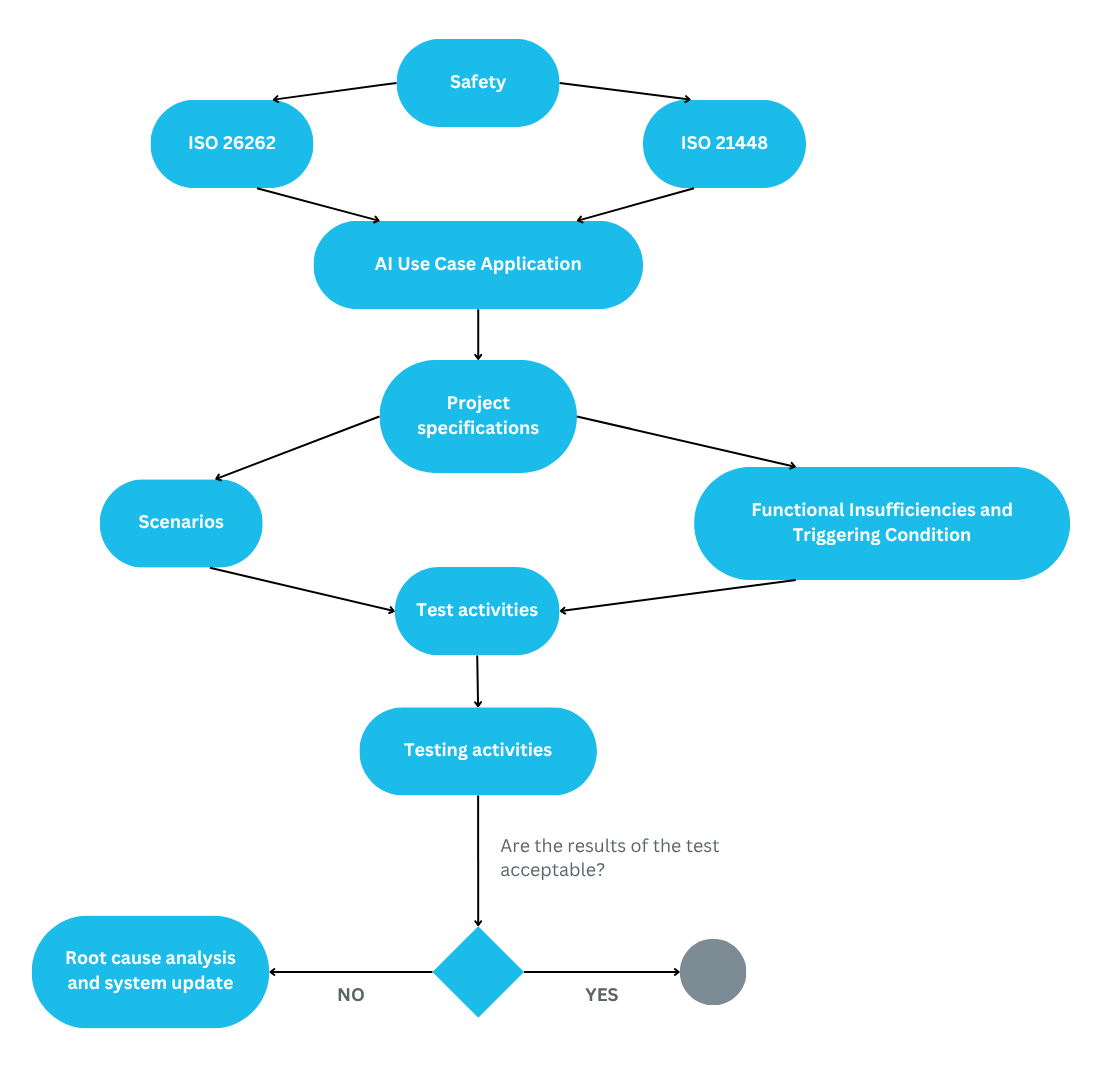

As part of SAFEXPLAIN, Exida has contributed a methodology for a verification and validation (V&V) strategy for AI-based components in safety-critical systems. The approach combines two standards, ISO 21448 (also known as SOTIF) and ISO 26262, to address the unique challenges posed by AI in real-world applications.

Since the project started, a new standard for AI-based functionality, ISO/PAS 8800, has been published (December 2024). It integrates key aspects of ISO 26262 and ISO 21448 and extends them to aspects specific to AI.

An initial cross-check suggests that, within the scope of our applications, the SAFEXPLAIN methodology is already compliant with the new standard; further evaluations are ongoing.

Figure 1: V&V strategy

Vehicle & Element Level

The analysis performed in SAFEXPLAIN has two complementary levels: the vehicle level and the element level.

- The vehicle level takes the system point of view: the vehicle is considered a unified entity, so the analysis covers all integrated functions, AI-based functions included.

- The element level takes the point of view of the sub-components (e.g., logic, sensor, …). At this level, the analysis focuses on testing the sub-components' behavior and detecting the failures or initiators that can lead to hazardous behavior.

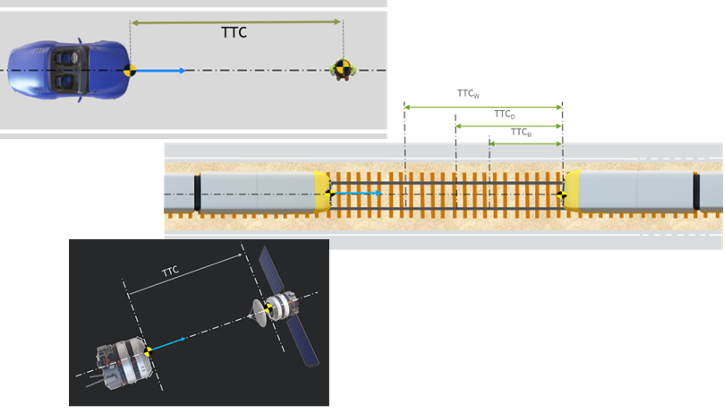

Figure 2: Scenarios created for each use case

V&V strategy application at vehicle level

At the vehicle level, a scenario catalogue was built from the defined specifications to capture the relevant driving situations to be verified. From this catalogue, a set of test cases was derived to test the intended functionality.
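As a rough illustration of the step from catalogue to test cases, the sketch below assumes that each catalogue entry is described by a few discrete parameters and that every combination of parameter values becomes one test case. The class names, the function `derive_test_cases`, and the AEB-style scenarios are hypothetical and not taken from the SAFEXPLAIN catalogue; full combinatorial expansion is only one simple derivation strategy, and a real catalogue may rely on sampling or criticality-based selection instead.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class Scenario:
    """One entry of a hypothetical scenario catalogue."""
    name: str
    # Each parameter maps to the discrete values that testing must cover.
    parameters: dict[str, list]


@dataclass(frozen=True)
class TestCase:
    scenario: str
    values: tuple  # (parameter, value) pairs, ordered by parameter name


def derive_test_cases(catalogue):
    """Expand every scenario into one test case per combination of parameter values."""
    cases = []
    for scenario in catalogue:
        keys = sorted(scenario.parameters)
        for combo in product(*(scenario.parameters[k] for k in keys)):
            cases.append(TestCase(scenario.name, tuple(zip(keys, combo))))
    return cases


# Illustrative AEB-style catalogue (assumed values, not project data).
catalogue = [
    Scenario("pedestrian_crossing",
             {"ego_speed_kmh": [30, 50], "lighting": ["day", "night"]}),
    Scenario("stopped_vehicle_ahead", {"ego_speed_kmh": [50, 80, 110]}),
]
cases = derive_test_cases(catalogue)
print(len(cases))  # 7
```

Keeping test cases as plain, immutable records makes it straightforward to trace each executed test back to the catalogue entry it came from.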

The V&V strategy described above has been applied to the following use cases:

Automotive (led by NavInfo)

- Autonomous Emergency Braking (AEB) implemented in a car that will be driven on the street.

- The AEB is expected to brake the vehicle when a collision risk is present.

Railway (led by IKERLAN)

- Autonomous Train Operation (ATO) implemented in a Grade of Automation 2 (GoA 2) train that will travel on the railway.

- The ATO is expected to decelerate the train and warn the driver when a collision risk is present, so that the driver can intervene manually if necessary.

Aerospace (led by Aiko)

- Guidance, Navigation and Control (GNC) implemented in a vehicle that will fly in space.

- The GNC is expected to warn the driver when a docking site is detected and to indicate the path to follow for the docking operation.

V&V strategy application at element level: STPA

System-Theoretic Process Analysis (STPA) is a safety analysis approach designed to evaluate the safety of complex systems and to identify safety constraints and requirements. STPA is well suited to SOTIF because it can address functional insufficiencies, use of the system in an unsuitable environment, misuse by persons, and similar causes of hazardous behavior.

STPA follows a precise sequence of steps:

- Scope of the analysis: the losses of functionality that lead to vehicle-level hazards are identified;

- Control structure: the control actions foreseen to control and manage the identified losses are identified;

- Unsafe control actions: the failures related to the identified control actions are identified;

- Causal scenarios: the functional insufficiencies and triggering conditions that act as "initiators" leading to the hazards are identified;

- Improvements and mitigations: the system design is improved and requirements are derived, defining the improvements needed to mitigate the risk of the hazardous situations.
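The chain of STPA artifacts described in these steps can be sketched as a small data model, where each unsafe control action (UCA) is linked to a hazard and to the causal scenarios that explain it, and each causal scenario eventually receives a derived requirement. This is a minimal sketch under stated assumptions: the class names, the `unmitigated` helper, and the AEB example content are hypothetical and do not represent SAFEXPLAIN tooling. The four UCA categories follow general STPA practice.

```python
from dataclasses import dataclass, field
from enum import Enum


class UcaType(Enum):
    # The four general ways a control action can be unsafe in STPA.
    NOT_PROVIDED = "not providing causes hazard"
    PROVIDED = "providing causes hazard"
    WRONG_TIMING = "too early, too late, or out of order"
    WRONG_DURATION = "stopped too soon or applied too long"


@dataclass
class CausalScenario:
    insufficiency: str          # functional insufficiency of the element
    triggering_condition: str   # condition acting as the "initiator"
    requirement: str = ""       # mitigation derived in the last step (empty = open)


@dataclass
class UnsafeControlAction:
    control_action: str
    uca_type: UcaType
    context: str
    hazard: str                 # vehicle-level hazard, traced to a loss
    scenarios: list = field(default_factory=list)


def unmitigated(ucas):
    """Return (UCA, scenario) pairs for which no requirement has been derived yet."""
    return [(u, s) for u in ucas for s in u.scenarios if not s.requirement]


# Hypothetical AEB example: braking is not commanded although a pedestrian is in the path.
brake_missing = UnsafeControlAction(
    control_action="brake command",
    uca_type=UcaType.NOT_PROVIDED,
    context="pedestrian in the ego path",
    hazard="H1: collision with a vulnerable road user (loss: harm to people)",
    scenarios=[
        CausalScenario("detector misses partially occluded pedestrians",
                       "pedestrian emerging from behind a parked car",
                       requirement="cover occlusion cases in the test catalogue"),
        CausalScenario("detection confidence drops in low light",
                       "night-time driving without street lighting"),
    ],
)
open_items = unmitigated([brake_missing])
print(len(open_items))  # 1
```

Querying for scenarios without a requirement is one simple way to check that the last STPA step has been completed for every identified initiator.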

Rethinking Safety for AI

Integrating AI into these use cases introduces new safety concerns, particularly for the aerospace use case:

- Lack of mature standards that can guarantee the safety of AI applications

- Commercial off-the-shelf (COTS) software not sufficiently suitable for safety-critical situations (e.g., aerospace applications)