Space Case Study

The space industry has long been a leading sector in technology development and research, pushing to automate operations in harsh environments and safety-critical scenarios. Automating space mission operations can make their management more efficient. With a state-of-the-art degree of automation in mission operations, a single operator is expected to be able to manage tens, if not hundreds, of satellites at the same time, with potential savings on the order of 25% of total mission costs. The key technology enabling fully autonomous space missions is artificial intelligence (AI).

Space missions require extensively tested and certified components

Challenge:

The current approach to space mission operations involves extensive use of ground operators to perform activities such as mission planning, telemetry monitoring, payload data analysis and failure mitigation.

State-of-the-art mission autonomy and artificial intelligence technologies can significantly advance the move towards fully autonomous operations during space missions.

However, space agencies require extensive testing and certification for components and systems employed in space missions.

This requirement remains a limiting factor for many techniques that behave non-deterministically and offer little human interpretability.

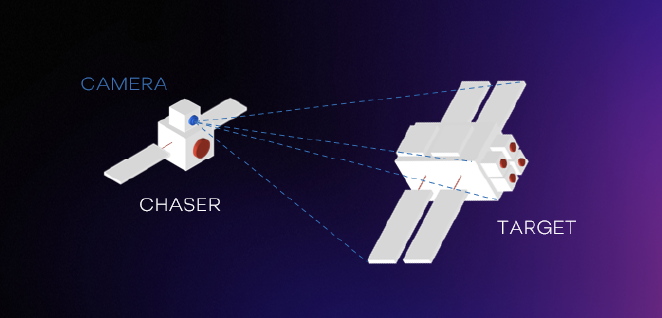

The space case study in SAFEXPLAIN uses Deep Learning (DL) models for visual processing in a rendezvous and docking scenario. A vision-based DL algorithm estimates the pose of the target. The task can be particularly challenging: images are captured in grayscale and generally at quite low resolution, under varying illumination conditions and target poses, against different backgrounds (space, the Earth, other objects in orbit), and with the noise of real optical sensors.

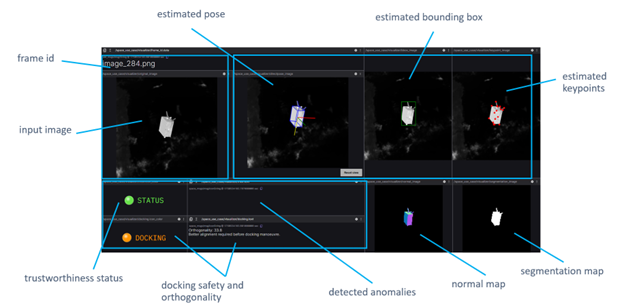

Figure 2: DL visual processing for a satellite rendezvous and docking scenario.
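To make "pose estimation" concrete, here is a minimal sketch of how an estimated pose could be compared against a reference pose. It assumes the pose is expressed as a unit quaternion (w, x, y, z) plus a translation vector in metres. That representation, the function names and the error measures are illustrative assumptions, not the metric or code used in the SAFEXPLAIN case study.

```python
import math


def _normalize(q):
    """Return q scaled to unit length (q is a 4-tuple: w, x, y, z)."""
    n = math.sqrt(sum(c * c for c in q))
    if n == 0.0:
        raise ValueError("zero-norm quaternion")
    return tuple(c / n for c in q)


def rotation_error_rad(q_est, q_true):
    """Angle (radians) of the rotation that separates two orientations.

    The absolute value of the dot product is used because q and -q
    describe the same physical rotation.
    """
    a, b = _normalize(q_est), _normalize(q_true)
    dot = abs(sum(x * y for x, y in zip(a, b)))
    dot = min(1.0, dot)  # guard against rounding slightly above 1
    return 2.0 * math.acos(dot)


def translation_error(t_est, t_true):
    """Return (absolute error, error relative to the true range)."""
    diff = math.sqrt(sum((x - y) ** 2 for x, y in zip(t_est, t_true)))
    true_range = math.sqrt(sum(y * y for y in t_true))
    if true_range == 0.0:
        raise ValueError("true translation has zero length")
    return diff, diff / true_range


# Hypothetical example values: target 10 m away, estimate off by 0.5 m
# and rotated 90 degrees about the z axis.
q_true = (1.0, 0.0, 0.0, 0.0)
q_est = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
angle = rotation_error_rad(q_est, q_true)
abs_err, rel_err = translation_error((0.0, 0.0, 10.5), (0.0, 0.0, 10.0))
```

Dividing the translation error by the true range is one common design choice: a given error in metres matters more when the target is close, as it is in the final phase of docking.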

SAFEXPLAIN is helping create a reliable environment for DL

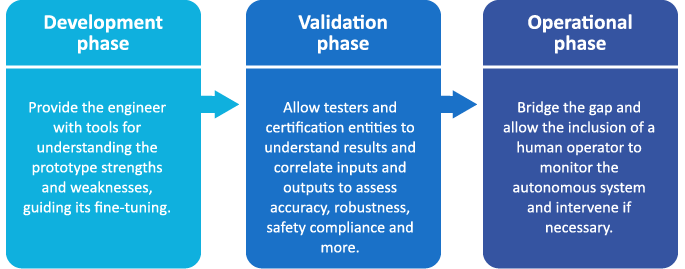

As with other critical domains, the aerospace domain has been reluctant to adopt DL technology. SAFEXPLAIN aims to overcome this reluctance by addressing concerns about the validation of DL and the trustworthiness of DL algorithms in safety-critical applications.

Explainable AI can help by making algorithm results comprehensible, which is fundamental to validating them. Explainability techniques enable some degree of introspection into the models, which can be of great value in different phases of the algorithm lifecycle.
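As one illustration of what "introspection" can mean in practice, the sketch below implements occlusion sensitivity, a generic explainability technique: patches of the input image are masked one at a time, and the drop in the model's output is recorded as a heatmap. The source does not state which techniques SAFEXPLAIN uses, so this is only an assumed example. `toy_score` is a hypothetical stand-in for a real model.

```python
def occlusion_sensitivity(image, score_fn, patch=2, fill=0.0):
    """Return a heatmap of how much the score drops when each patch is masked.

    image    : list of rows of floats (a grayscale image)
    score_fn : callable mapping an image to a single float
    patch    : side length of the square occlusion patch, in pixels
    fill     : value written into the occluded pixels
    """
    rows, cols = len(image), len(image[0])
    baseline = score_fn(image)
    heatmap = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            r1, c1 = min(r0 + patch, rows), min(c0 + patch, cols)
            for r in range(r0, r1):
                for c in range(c0, c1):
                    occluded[r][c] = fill
            drop = baseline - score_fn(occluded)
            for r in range(r0, r1):
                for c in range(c0, c1):
                    heatmap[r][c] = drop
    return heatmap


def toy_score(image):
    """Hypothetical model: mean brightness of the top-left 2x2 region."""
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0


image = [[1.0] * 4 for _ in range(4)]
heatmap = occlusion_sensitivity(image, toy_score, patch=2)
```

Large values in the heatmap mark the regions the score depends on. For the toy model that is only the top-left patch, which is the kind of check a reviewer could use to confirm that a model attends to the target rather than to the background.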

Explainability techniques help to:

See the final results

Figure 5: Excerpt from the live space demo at the SAFEXPLAIN final event

Read more about our progress in the space case study:

- Gauging requirements and testing models for Space, Automotive and Railway Case Studies

- Safely docking a spacecraft to a target vehicle

- D5.1 Case study stubbing and early assessment of case study porting

- SAFEXPLAIN shares its safety critical solutions with aerospace industry representatives

- Safety for AI-Based Systems

- Core Demo Webinar: Making certifiable AI a reality for critical systems
