The SAFEXPLAIN railway demo comprises an Automatic Train Operation (ATO) system that detects obstacles on the railway and estimates their distance using a stereo camera pair (left and right cameras). It is built on a ROS2 architecture and consists of four main nodes: the video player (which simulates the camera output), the object and track detection node, the stereo depth estimation node, and the safety function node.

Depending on the type of obstacle detected (critical or non-critical), its distance, and its location (on the track or outside the track), the system responds by warning the driver, activating the service brake, or activating the emergency brake. Overall, the system implements safety functions that minimize the risk of the train running over or injuring people on the track, colliding with obstacles on the track, or injuring passengers on the train itself.

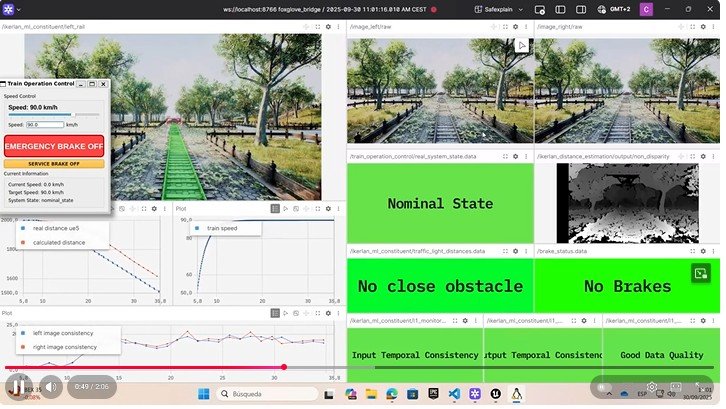

These two videos illustrate the railway obstacle detection and safety response workflow. In both cases the scenario is identical: a train advances along the track toward a car located on the track. The system processes stereo camera inputs, estimates obstacle distance, classifies obstacle location (track vs. platform), and drives safety state transitions which influence the driver interface and target speed.

More concretely, the system focuses on a scenario in which the AI system participates in the safety chain, although this participation has a low Safety Integrity Level (SIL). The AI system detects an obstacle on a collision course and warns the train driver of the potential hazard. Meanwhile, the AI system activates the service brake, thereby reducing the speed of the train. The service brake corresponds to a SIL2 safety function. Once the driver has been warned, they can activate the emergency brake (SIL4) or temporarily deactivate the service brake (SIL2) (see Figure 1).

Figure 1: The rail system implements safety functions to minimize the risk of the train running over or injuring people on the track, colliding with obstacles on the track, or injuring passengers on the train itself.

The high-level architecture of the ATO system can be seen in Figure 2 and consists of the following main components:

- Object Detection: Detects objects in the two images given by the left and right cameras and identifies the train tracks.

- Distance Estimation: Using stereo vision, distance is estimated for all the objects detected in the two images given by the left and right cameras.

- Object classification and positioning: Classifies the objects detected by the ‘object and track detection’ module according to their criticality and their position, discarding non-critical objects and objects outside the track.
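
The distance estimation step can be illustrated with the textbook relation for a rectified stereo pair, Z = f · B / d (focal length times baseline, divided by disparity). The source does not state which method the stereo depth node actually uses, so the following is only a minimal sketch under that assumption. The function names, the per-object median, and all parameter values are hypothetical.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Distance in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero or negative disparity means no valid match (point at infinity).
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


def object_distance(disparities_px, focal_length_px, baseline_m):
    """One distance per object, from the (upper) median of the valid
    disparities sampled inside its bounding box."""
    valid = sorted(d for d in disparities_px if d > 0)
    if not valid:
        return float("inf")
    median = valid[len(valid) // 2]
    return depth_from_disparity(median, focal_length_px, baseline_m)


if __name__ == "__main__":
    # Hypothetical camera: 1000 px focal length, 0.5 m baseline.
    print(object_distance([0, 18, 20, 22, -1], 1000.0, 0.5))
```

Using a median over the box rather than a single pixel is one simple way to reduce the effect of mismatched pixels; it is a design choice of this sketch, not a documented property of the demo.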

The depth estimated by the ‘distance estimation’ module is then compared against the defined threshold(s). If an obstacle is detected on the tracks within those thresholds, the system warns the driver and activates the service brake (SIL2).
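
The filtering and threshold comparison described above can be sketched as follows. This is an illustration only, not the project's safety function: the class labels, the two distance thresholds, and every name in the code are assumptions made for the example. The emergency brake is omitted because the text leaves that action to the driver.

```python
from dataclasses import dataclass
from enum import Enum


class Response(Enum):
    NONE = 0
    WARN_DRIVER = 1
    SERVICE_BRAKE = 2  # in this sketch, braking also implies a driver warning


@dataclass
class Obstacle:
    label: str
    on_track: bool
    distance_m: float


# Hypothetical values; the real criticality classes and thresholds
# are not given in the text.
CRITICAL_LABELS = {"person", "car"}
WARN_DISTANCE_M = 300.0
BRAKE_DISTANCE_M = 150.0


def evaluate(obstacles):
    """Discard non-critical and off-track objects, then compare the
    nearest remaining obstacle against the distance thresholds."""
    relevant = [o for o in obstacles
                if o.on_track and o.label in CRITICAL_LABELS]
    if not relevant:
        return Response.NONE
    nearest = min(o.distance_m for o in relevant)
    if nearest <= BRAKE_DISTANCE_M:
        return Response.SERVICE_BRAKE
    if nearest <= WARN_DISTANCE_M:
        return Response.WARN_DRIVER
    return Response.NONE


if __name__ == "__main__":
    print(evaluate([Obstacle("car", True, 120.0)]))
```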

Figure 2: High-level architecture of the ATO system

The reference safety architecture pattern previously defined in D2.2 has been adapted to this particular system. In Figure 3, the architecture is displayed together with references to the repositories where the code of these components is stored. These components are explained in detail in the project’s technology repository.

All the information about the rail case study is explained further in the deliverables produced during the SAFEXPLAIN project (SAFEXPLAIN Deliverables). Deliverables D2.2 and D2.4 offer an in-depth explanation of the case study.

Safety Pattern Library

It is important to note that this case study does not contain the L1 monitors developed within the SAFEXPLAIN project (Safety Pattern Library), since they are proprietary content of IKERLAN.

For more information on the Safety Pattern Library contact:

Irune Agirre Troncoso, Team Leader – Dependability and Cybersecurity Methods, Cybersecurity and Dependability Division, IKERLAN Technological Research Centre, Member of the Basque Research & Technology Alliance (BRTA)

+34 943 71 24 00

SAFE YOLO: A MISRA C-Compliant YOLO for Object Detection

The use of AI has become widespread across various domains due to its ability to perform complex functions with high performance. However, when AI-based systems are employed in safety-related functionalities, it must be ensured that errors do not lead to system malfunctions, as these could result in catastrophic consequences. This represents an open and relevant research field, since existing AI algorithms and execution frameworks were not originally designed with safety considerations in mind. Moreover, critical systems often require certification, yet there are currently no established standards that provide clear guidance on how to ensure the necessary risk reduction when AI is integrated into such systems.

Several research works and emerging initiatives are addressing these challenges. Examples include standards such as ISO/PAS 8800:2024, ISO/IEC TR 5469:2024 and ISO/IEC 5338:2023, which cover different aspects of AI and functional safety, as well as quality assurance frameworks such as A-SPICE.

These emerging standards, such as ISO/PAS 8800:2024, require that the processes and tools used in the development of AI models be analyzed to identify, mitigate, and document possible sources of error. In addition, traditional functional safety standards, such as IEC 61508 or ISO 26262, establish a set of requirements that all safety-critical software must fulfill. This gap has motivated our work, which focuses on systematic error prevention within the software frameworks that support AI. Specifically, our aim is to ensure that the software implementation of the AI framework complies with well-established functional safety standards, such as IEC 61508:2012 for industrial applications.

According to Table A.3 of the IEC 61508:2012 standard, the use of a suitable, strongly typed programming language is Highly Recommended (HR) regardless of the Safety Integrity Level (SIL), while the use of a language subset is also HR for SIL 3 and SIL 4. Based on these recommendations, we consider a C-based AI framework to be highly suitable for safety-critical systems, as C is widely used in such domains and can meet these requirements. Unlike unrestricted C, a restricted language subset is widely adopted in industry to mitigate potentially dangerous programming errors. This led us to adopt the MISRA C guidelines, a well-established coding standard, in order to meet the aforementioned recommendations.

Given that our work focuses on adapting an AI-based system, we have targeted one of its most relevant functionalities: object detection. To this end, we target YOLOv4, which, to the best of our knowledge, is the most recent Darknet-based version written in C and CUDA. Our efforts are primarily directed at mitigating potential programming errors in its C implementation to improve reliability and ensure alignment with functional safety standards.

XAI FOR SAFETY / EXPLib

The EXPLib library provides explainability for YOLO object detection models through the generation of heatmaps based on Grad-CAM (Gradient-weighted Class Activation Mapping) techniques. Specifically designed for critical autonomous systems such as trains, automobiles, and satellites, the library enables visualization of which image regions contribute most to the model’s predictions, thus facilitating the interpretation of detection decisions. It supports multiple explainability methods including EigenGradCAM, GradCAM, GradCAMPlusPlus, and ScoreCAM, and is optimized to work with both YOLO detection and segmentation models.

The system is capable of processing model outputs both with and without Non-Maximum Suppression applied, calculating Intersection over Union (IoU) metrics to correctly associate each final detection with the corresponding internal activations, which ensures that the generated explanations are precise and specific for each detected object. Additionally, it incorporates a faithfulness metrics system that objectively evaluates the quality of the generated explanations by comparing heatmap intensity values inside and outside the bounding boxes.
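
To make the two mechanisms above more concrete, the sketch below shows IoU-based association of a final detection with a raw (pre-NMS) prediction, followed by one possible inside/outside heatmap comparison. It is not EXPLib's API: the function names, the `(x1, y1, x2, y2)` box format, and the ratio used as a faithfulness score are all assumptions, since the text does not define the exact metric.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def match_raw_prediction(final_box, raw_boxes):
    """Index of the raw (pre-NMS) box that overlaps a final detection
    most, or None if there are no raw boxes."""
    if not raw_boxes:
        return None
    return max(range(len(raw_boxes)),
               key=lambda i: iou(final_box, raw_boxes[i]))


def inside_outside_ratio(heatmap, box):
    """Mean heatmap intensity inside the box divided by the mean outside.
    heatmap is a list of rows; box uses integer pixel coordinates with
    exclusive upper bounds. Returns inf when the outside mean is zero."""
    x1, y1, x2, y2 = box
    inside, outside = [], []
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if x1 <= x < x2 and y1 <= y < y2:
                inside.append(value)
            else:
                outside.append(value)
    mean_in = sum(inside) / len(inside) if inside else 0.0
    mean_out = sum(outside) / len(outside) if outside else 0.0
    return mean_in / mean_out if mean_out > 0 else float("inf")


if __name__ == "__main__":
    raw = [(5, 5, 6, 6), (0, 0, 2, 2.5), (1, 1, 3, 3)]
    print(match_raw_prediction((0, 0, 2, 2), raw))
```

Under this formulation, a ratio well above 1 indicates that the explanation concentrates on the detected object rather than on the background.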