The SAFEXPLAIN project has reached an exciting milestone: integrating the results produced by the different partners.

The work on the case studies began with the preparation of AI algorithms and of the datasets required for training and for simulating the operational scenarios. For the space case study, this work has resulted in the pose estimation system described here.

In parallel, the case studies have been supported by the partners working on explainable AI (XAI), safety patterns and platform development.

- XAI experts have been working with the case study partners to collect techniques for explaining and understanding the models and their decisions, and are packaging these techniques into a ready-to-use library.

- Safety pattern experts have been defining safety patterns and the architectures that implement them, along with test scenarios and reference material for the case studies.

- Platform experts have built a software environment, embedded in the platform, that provides a layer for executing the different nodes, monitoring them and configuring resource allocation.

These intermediate products are now being integrated into a single, seamless system. At its core is an AI model designed to guarantee safe execution. The model is equipped with supervision and diagnostics functions and wrapped in embedded middleware built with portability in mind.

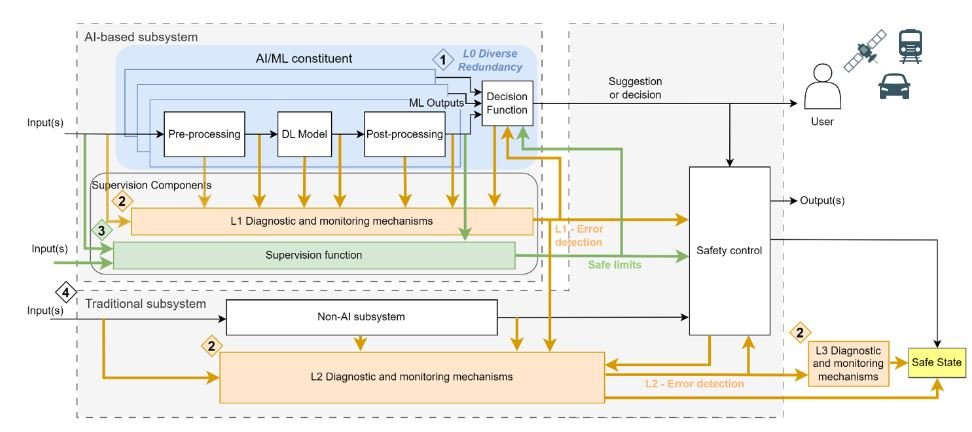

The L1 diagnostics and monitoring components will apply several techniques to monitor the reliability of the execution. The input images flowing into the system will be checked for temporal consistency, that is, that they arrive in a coherent sequence without excessive lag or spurious outliers. A separate component will assess data quality, ensuring that degraded input does not compromise the correct functioning of the system.
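The temporal consistency check could look like the minimal sketch below. The post does not describe the actual frame metadata, so it assumes each image carries a sequence number and an acquisition timestamp. The `Frame` type, the `check_temporal_consistency` function and the 0.2 s lag budget are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Frame:
    seq: int          # hypothetical sequence number stamped by the camera driver
    timestamp: float  # hypothetical acquisition time, in seconds


def check_temporal_consistency(
    prev: Optional[Frame], curr: Frame, max_gap_s: float = 0.2
) -> List[str]:
    """Return the temporal-consistency issues found between two consecutive frames."""
    issues: List[str] = []
    if prev is None:  # first frame: nothing to compare against yet
        return issues
    if curr.seq != prev.seq + 1:
        issues.append("sequence gap or reordering")
    dt = curr.timestamp - prev.timestamp
    if dt <= 0:
        issues.append("non-increasing timestamp")
    elif dt > max_gap_s:  # max_gap_s is an assumed lag budget, not a project value
        issues.append("excessive lag")
    return issues


# Usage: scan a short stream and report each problematic frame.
stream = [Frame(0, 0.00), Frame(1, 0.10), Frame(3, 0.50), Frame(4, 0.45)]
prev = None
for frame in stream:
    for issue in check_temporal_consistency(prev, frame):
        print(f"frame {frame.seq}: {issue}")
    prev = frame
```

Each check compares only against the previous frame. This keeps the cost per image constant, which matters on an embedded target.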

The supervision function will be in charge of verifying that the input, the output and the model itself produce meaningful data. An anomaly detector based on a Variational Autoencoder (VAE) will flag issues by reconstructing plausible data and comparing it with the data actually produced by the system; a large discrepancy indicates an anomaly. The uncertainty of the model output will also be estimated, quantifying how confident the model is in its conclusions and hence whether they can be trusted.
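The two supervision checks reduce to simple scores once the model outputs are available. The sketch below assumes the following:

- The VAE's reconstruction `x_hat` is already computed and flattened to a vector.
- The anomaly threshold has been calibrated on nominal data.
- Uncertainty is measured as the spread of repeated stochastic predictions, for example from Monte Carlo dropout or an ensemble. The post does not say which method the project uses.

All function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import Sequence


def reconstruction_error(x: Sequence[float], x_hat: Sequence[float]) -> float:
    """Mean squared error between an input and its VAE reconstruction."""
    if len(x) != len(x_hat):
        raise ValueError("input and reconstruction must have the same length")
    return mean((a - b) ** 2 for a, b in zip(x, x_hat))


def is_anomalous(x: Sequence[float], x_hat: Sequence[float], threshold: float) -> bool:
    """Flag the input when the VAE cannot reconstruct it well (assumed calibrated threshold)."""
    return reconstruction_error(x, x_hat) > threshold


def predictive_uncertainty(predictions: Sequence[float]) -> float:
    """Population standard deviation of repeated stochastic predictions; larger means less certain."""
    return pstdev(predictions)
```

A VAE trained only on nominal data tends to reconstruct unfamiliar inputs poorly. That is why reconstruction error serves as the anomaly score.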

The L2 diagnostics and monitoring components will monitor the health of the different processes and nodes. These include the application nodes, the other diagnostics nodes, and the supervision and decision nodes.
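A common way to implement this kind of node health monitoring is a heartbeat watchdog. The post does not specify the mechanism, so the sketch below is an assumption, and the `HeartbeatMonitor` class and node names are hypothetical. Each node periodically reports that it is alive, and the monitor flags any node that has been silent for longer than a timeout.

```python
from typing import Dict, Iterable, List


class HeartbeatMonitor:
    """Flags nodes whose last heartbeat is older than a timeout."""

    def __init__(self, nodes: Iterable[str], timeout_s: float, start_time: float):
        self.timeout_s = timeout_s
        # Every expected node starts "fresh", so a node that never reports
        # is still flagged once the timeout elapses.
        self.last_seen: Dict[str, float] = {n: start_time for n in nodes}

    def beat(self, node: str, now: float) -> None:
        """Record a heartbeat from a node at time `now` (seconds)."""
        self.last_seen[node] = now

    def unhealthy(self, now: float) -> List[str]:
        """Return, sorted by name, the nodes that have been silent for too long."""
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout_s)


monitor = HeartbeatMonitor(["pose_estimator", "vae_supervisor", "decision"], timeout_s=1.0, start_time=0.0)
monitor.beat("pose_estimator", 0.8)
monitor.beat("vae_supervisor", 0.9)
print(monitor.unhealthy(now=1.5))  # → ['decision'] (it never reported)
```

Time is passed in explicitly rather than read from a clock. This keeps the logic deterministic and easy to test.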

The decision function will assess the confidence level of the model outputs. It will compare the results of redundant AI models, which perform the same task (pose estimation) in different ways, and combine them with information from the diagnostics and supervision functions.
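One possible decision rule is sketched below. The project's actual scoring is not described, so the linear decay, the `tolerance_m` parameter and the function name are all hypothetical. For brevity, the sketch compares only the position part of two redundant pose estimates:

- Confidence is highest when the two models agree.
- It falls to zero as their disagreement approaches a tolerance.
- It is zeroed outright if diagnostics or supervision raised an alarm.

```python
import math
from typing import Sequence


def output_confidence(
    pose_a: Sequence[float],
    pose_b: Sequence[float],
    tolerance_m: float,
    diagnostics_ok: bool,
    supervision_ok: bool,
) -> float:
    """Confidence in [0, 1] from two redundant position estimates plus health flags."""
    if not (diagnostics_ok and supervision_ok):
        return 0.0  # any diagnostics or supervision alarm removes trust in the output
    disagreement = math.dist(pose_a, pose_b)  # Euclidean distance, in metres
    # Full confidence when the models agree; decays linearly to 0 at the tolerance.
    return max(0.0, 1.0 - disagreement / tolerance_m)
```

Redundancy is useful here because two models that compute the pose in different ways are unlikely to fail in the same way at the same time.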

The safety function will have the final say over the output of the decision function (the output confidence) and over the diagnostics outputs. It will also integrate the orthogonality computation, which estimates how well the agent is aligned with the docking site. This is a fundamental feature, since a docking manoeuvre can be successful and safe only with a precise approach. On this basis, the safety function will either validate the system outputs and pass them to the agent, or signal to the agent that its execution has failed and that it must revert to a safe mode.
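The final safety gate could be sketched as follows. The post does not give the orthogonality formula, so this assumes it is the angle between the agent's approach direction and the docking axis, meaning the direction perpendicular to the docking face along which the agent should travel. The `Verdict` enum, the function names and both thresholds are hypothetical.

```python
import math
from enum import Enum
from typing import Sequence


class Verdict(Enum):
    VALIDATE = "validate"    # pass the outputs on to the agent
    SAFE_MODE = "safe_mode"  # signal a failure and request reversion to safe mode


def misalignment_deg(approach: Sequence[float], docking_axis: Sequence[float]) -> float:
    """Angle in degrees between the approach direction and the assumed docking axis."""
    dot = sum(a * b for a, b in zip(approach, docking_axis))
    norm = math.hypot(*approach) * math.hypot(*docking_axis)
    if norm == 0:
        raise ValueError("direction vectors must be non-zero")
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))


def safety_gate(
    confidence: float,
    diagnostics_ok: bool,
    approach: Sequence[float],
    docking_axis: Sequence[float],
    min_confidence: float = 0.8,        # assumed threshold
    max_misalignment_deg: float = 2.0,  # assumed threshold
) -> Verdict:
    """Validate only when confidence, diagnostics and alignment all pass."""
    if not diagnostics_ok or confidence < min_confidence:
        return Verdict.SAFE_MODE
    if misalignment_deg(approach, docking_axis) > max_misalignment_deg:
        return Verdict.SAFE_MODE
    return Verdict.VALIDATE
```

The gate is conservative by construction. Any single failing condition is enough to send the agent to safe mode.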