By Javier Fernández, Dependability and Cybersecurity Methods, IKERLAN / Basque Research and Technology Alliance (BRTA)

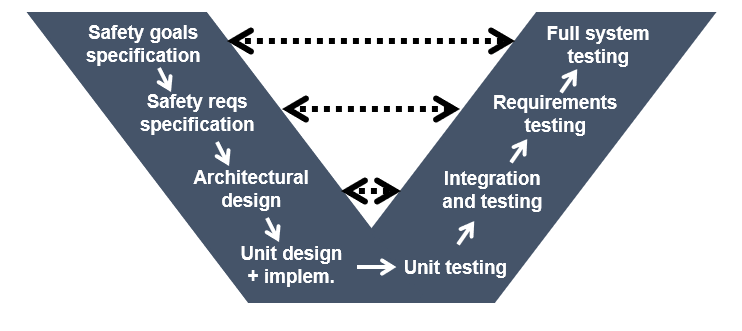

The development of safety-critical systems follows a well-known V-model, moving from safety goals to safety requirements, system architecture design, software and hardware architecture design, and implementation, to obtain a system that is intended to be safe by construction. This is followed by a testing phase that proceeds from unit testing up to full system testing against the defined safety requirements.

Functional Safety Management (FSM) defines the systematic approach required to develop safety-critical systems and to manage the other lifecycle phases, from concept definition to decommissioning and disposal. It covers planning, the team involved, activities, documents, technical considerations, configuration management, modification procedures and more. The main goal of FSM is to ease the definition, organization, and control of the information generated during safety-critical project development while fulfilling the requirements of functional safety standards.

Using AI to implement functions that fulfil safety requirements places new demands on traditional FSM, since such functions must still adhere to the applicable safety development and management processes [1][2][3]. Several challenges emerge because the general development process for AI-based systems clashes head-on with traditional safety development processes [1][2][3][4].

Some of these challenges include:

- The monolithic design of AI software (SW), which results from empirical training processes driven by training data rather than from the implementation of specific safety requirements.

- The inability of AI SW to be considered correct by design, unlike other kinds of SW in safety-critical systems, due to its predictive nature, which entails mispredictions and confidence values.

- The fact that AI SW is no longer independent of data. Its parameters are set empirically based on training datasets.

- The high-performance demands that AI SW places on the underlying hardware (HW). The resulting complexity, in both HW and SW, makes compliance with safety standards challenging.

Moreover, there is a lack of guidance in the development process for safety-critical systems that incorporate AI.

To address these challenges, SAFEXPLAIN has been developing an AI-FSM that guides the development process, maps the traditional lifecycle of safety-critical systems onto the AI lifecycle, and addresses their interactions. Figure 2 illustrates this work.

Figure 2: SAFEXPLAIN-developed FSM methodology including AI lifecycles.

The proposed AI-FSM extends widely adopted FSM methodologies derived from functional safety standards to address the specific needs of Deep Learning: architecture specification, data, learning and inference management, and appropriate testing steps. The SAFEXPLAIN-developed AI-FSM considers recommendations from IEC 61508 [5], EASA [6], ISO/IEC TR 5469 [3], AMLAS [7] and ASPICE 4.0 [8], among others.

The AI-FSM has received positive feedback in technical assessments by internal (EXIDA) and external (TÜV Rheinland) certification bodies. Overall, the assessments concluded that the safety considerations described in the AI-FSM are suitable for future certifiability.

Want to know more about AI-FSM?

- Learn about our January 2024 review meeting with TÜV Rheinland here.

- Find more details on the AI-FSM in IKERLAN's presentation at the 2024 Critical Automotive applications: Robustness & Safety (CARS) workshop.

References

[1] J. Perez-Cerrolaza et al., "Artificial Intelligence for Safety-Critical Systems in Industrial and Transportation Domains: A Survey," ACM Comput. Surv., 2023.

[2] ISO/AWI PAS 8800, Road Vehicles – Safety and artificial intelligence, under development.

[3] ISO/IEC TR 5469, Artificial intelligence – Functional safety and AI systems, Geneva, under development.

[4] J. Abella et al., "On Neural Networks Redundancy and Diversity for Their Use in Safety-Critical Systems," Computer, vol. 56, pp. 41-50, 2023.

[5] IEC 61508(-1/7), Functional safety of electrical / electronic / programmable electronic safety-related systems, 2010.

[6] European Union Aviation Safety Agency (EASA), "EASA Concept Paper: guidance for Level 1 & 2 machine learning applications," 2023.

[7] R. Hawkins et al., "Guidance on the Assurance of Machine Learning in Autonomous Systems (AMLAS)," 2021.

[8] "Automotive SPICE® Process Assessment / Reference Model Version 4.0," 2023.