Written by Robert Lowe & Thanh Bui, Humanized Autonomy Unit, RISE, Sweden.

Machine Learning life cycles for data science projects with safety-critical outcomes require assurances of the expected outputs at each stage before they can be evaluated (and certified) as safe. Explainable Artificial Intelligence (XAI) algorithms applied at each stage can potentially provide such assurances.

XAI refers to methods and techniques in the application of AI that allow the results of a solution to be understood by human experts. XAI seeks to make “black box” algorithms, such as Deep Learning models, comprehensible and interpretable. But explanations are needed for different types of users: what is comprehensible to a software developer may not be comprehensible to an operational user (e.g. a driver of a semi-autonomous vehicle who is required to make decisions based on the output of a “black box” AI model).

On this basis, as part of the SAFEXPLAIN project, researchers at RISE, in collaboration with IKERLAN, BSC and the other consortium partners, are investigating ways to apply XAI algorithms, with different levels of explanation, at each phase of the Machine Learning life cycle.

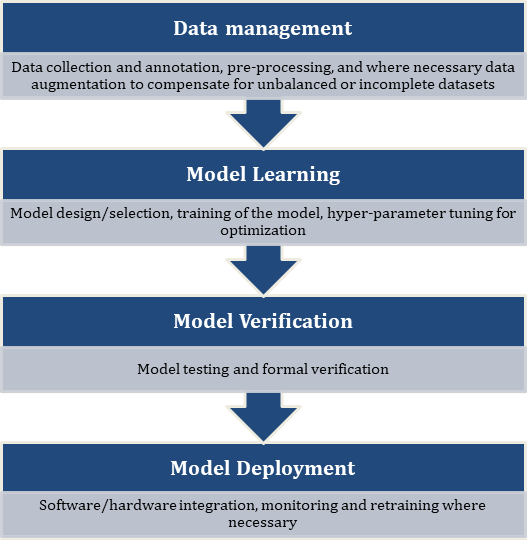

This cycle typically consists of the following core stages:

Not only can XAI help stakeholders better understand the decisions made by AI algorithms, but the insights it provides at each stage of the machine learning life cycle can also support model development, deployment, and ongoing maintenance, and so increase the quality of the underlying AI model.

In SAFEXPLAIN, RISE and other consortium members are investigating the state of the art of XAI, and which types of XAI are most suitable for each stage of the life cycle and for the corresponding users. We are looking into methods that allow XAI to direct the processing from one stage of the cycle to the next (or to track back to previous stages if satisfaction criteria are not met). The goal is to find an approach that allows functional safety assurance (FUSA) to be met with respect to the automotive, rail and space case studies examined in the project.
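The idea of moving forward when a stage's criteria are met, and tracking back when they are not, can be sketched as a small loop. This is only an illustrative sketch: the stage names are taken from this article, but their strict linear order, the `run_lifecycle` function, and the pass/fail checks are hypothetical and do not describe the SAFEXPLAIN implementation.

```python
from typing import Callable, Dict, List, Tuple

# Stage names come from the article; their linear order and the
# pass/fail checks below are assumptions made for this sketch.
STAGES = ["data_management", "model_learning", "model_verification", "deployment"]


def run_lifecycle(
    checks: Dict[str, Callable[[], bool]], max_steps: int = 20
) -> List[Tuple[str, bool]]:
    """Advance when a stage's check passes; otherwise step back one stage."""
    trace: List[Tuple[str, bool]] = []
    i = 0
    while i < len(STAGES) and len(trace) < max_steps:
        stage = STAGES[i]
        passed = checks[stage]()
        trace.append((stage, passed))
        # Move forward on success, track back one stage on failure.
        i = i + 1 if passed else max(i - 1, 0)
    return trace


# Hypothetical check: verification fails once, then passes after relearning.
_verification_calls = {"n": 0}


def verification_check() -> bool:
    _verification_calls["n"] += 1
    return _verification_calls["n"] > 1


checks = {
    "data_management": lambda: True,
    "model_learning": lambda: True,
    "model_verification": verification_check,
    "deployment": lambda: True,
}

trace = run_lifecycle(checks)
for stage, passed in trace:
    print(stage, "pass" if passed else "fail")
```

In a real setting each check would wrap an XAI-based evaluation with its own satisfaction criterion; the `max_steps` bound simply prevents the loop from cycling forever when a criterion can never be met.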

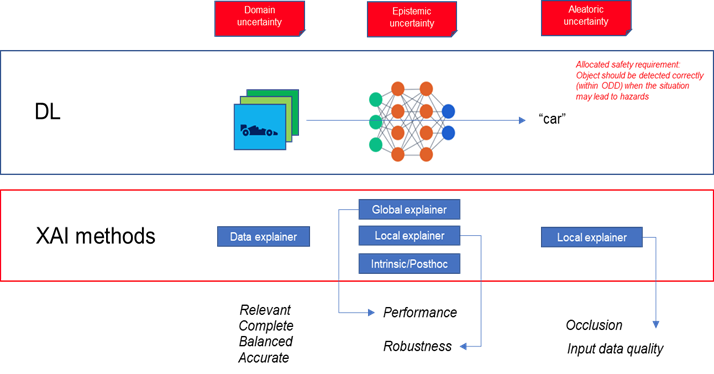

Fig. 2 provides an example of the ML-XAI integrated lifecycle. Data instances are presented to the model and evaluated by an XAI “Data explainer”. This stage of the life cycle (Data Management stage) requires evaluations of the data with respect to the domain task (e.g. prediction of specific objects in semi-autonomous vehicles in specific weather conditions).

The dimensions of these evaluations are: i) relevance (is the data relevant to the domain?), ii) completeness (is all relevant data present?), iii) balance (is the data distribution appropriate to the domain task?), and iv) accuracy (does the data accurately reflect reality?). XAI can mitigate Domain uncertainty, i.e. the difficulty of ensuring that datasets accurately represent the domain task. The performance of the trained neural network (Model Learning/Model Verification stages) is then subject to Epistemic uncertainty, which concerns whether the model design (degree of training, or hyperparameter tuning) is sufficient for modelling the domain task. XAI approaches can shed light on this using a variety of “explainer” types/methods, each of which, in turn, depends on the accuracy of the dataset.
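Two of these dimensions, completeness and balance, can be illustrated with a very simple label check. The sketch below is not an XAI “Data explainer”; it only assumes that class labels are available as a list, and the function name `balance_report`, the example classes, and the use of normalized entropy as a balance score are all choices made for illustration.

```python
import math
from collections import Counter
from typing import Dict, List


def balance_report(labels: List[str], expected_classes: List[str]) -> Dict[str, object]:
    """Report missing classes (completeness) and a 0..1 balance score."""
    counts = Counter(labels)
    # Completeness: which expected classes never appear in the data?
    missing = sorted(c for c in expected_classes if counts[c] == 0)
    total = sum(counts[c] for c in expected_classes)
    if total == 0 or len(expected_classes) < 2:
        return {"counts": dict(counts), "missing": missing, "balance": 0.0}
    # Balance: entropy of the class distribution, normalized so that
    # 1.0 means perfectly uniform and 0.0 means a single class.
    probs = [counts[c] / total for c in expected_classes if counts[c] > 0]
    entropy = -sum(p * math.log(p) for p in probs)
    return {
        "counts": dict(counts),
        "missing": missing,
        "balance": entropy / math.log(len(expected_classes)),
    }


# Hypothetical label set for an object-detection task.
labels = ["car"] * 6 + ["van"] * 2
report = balance_report(labels, ["car", "van", "truck"])
print(report["missing"], round(report["balance"], 3))
```

Relevance and accuracy generally need domain knowledge or reference annotations, so they are not covered by a count-based check like this one.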

Finally, during deployment, the model’s performance can be subject to aleatoric uncertainty within the operational design domain, which concerns the stochastic nature of the domain task. Such stochasticity can arise from vehicle sensor limitations/faults or from ambiguity/occlusions of objects (which make them difficult even for humans to annotate). Local explainers can be used to locate feature-level influences on inaccurate predictions caused by occlusions or, more generally, by hard-to-categorize objects, e.g. is the object a “car” or is it a “van”? Such information can help domain experts understand what classes of data are required to augment the original dataset (Data Management stage) to complete the ML-XAI cycle.
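One common family of local explainers is occlusion-based attribution: replace one input feature at a time with a baseline value and record how much the model's score changes. The sketch below shows the idea on a toy linear scorer. The function `occlusion_attribution`, the weights, and the input are hypothetical, and this is not necessarily the explainer used in SAFEXPLAIN.

```python
from typing import Callable, List, Sequence


def occlusion_attribution(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: float = 0.0,
) -> List[float]:
    """Score drop when each feature is replaced by the baseline, one at a time."""
    base_score = predict(x)
    attributions = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline  # hide feature i
        attributions.append(base_score - predict(occluded))
    return attributions


# Toy stand-in for a model's "car" score; the weights are made up.
WEIGHTS = [2.0, -1.0, 0.5]


def car_score(features: Sequence[float]) -> float:
    return sum(w * v for w, v in zip(WEIGHTS, features))


attributions = occlusion_attribution(car_score, [1.0, 1.0, 1.0])
print(attributions)  # [2.0, -1.0, 0.5]
```

A positive value means the feature pushed the score up, a negative value means it pushed the score down. For image models the same idea is usually applied to patches of pixels rather than single features, which is what makes it useful for spotting where an occlusion affected a prediction.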