News

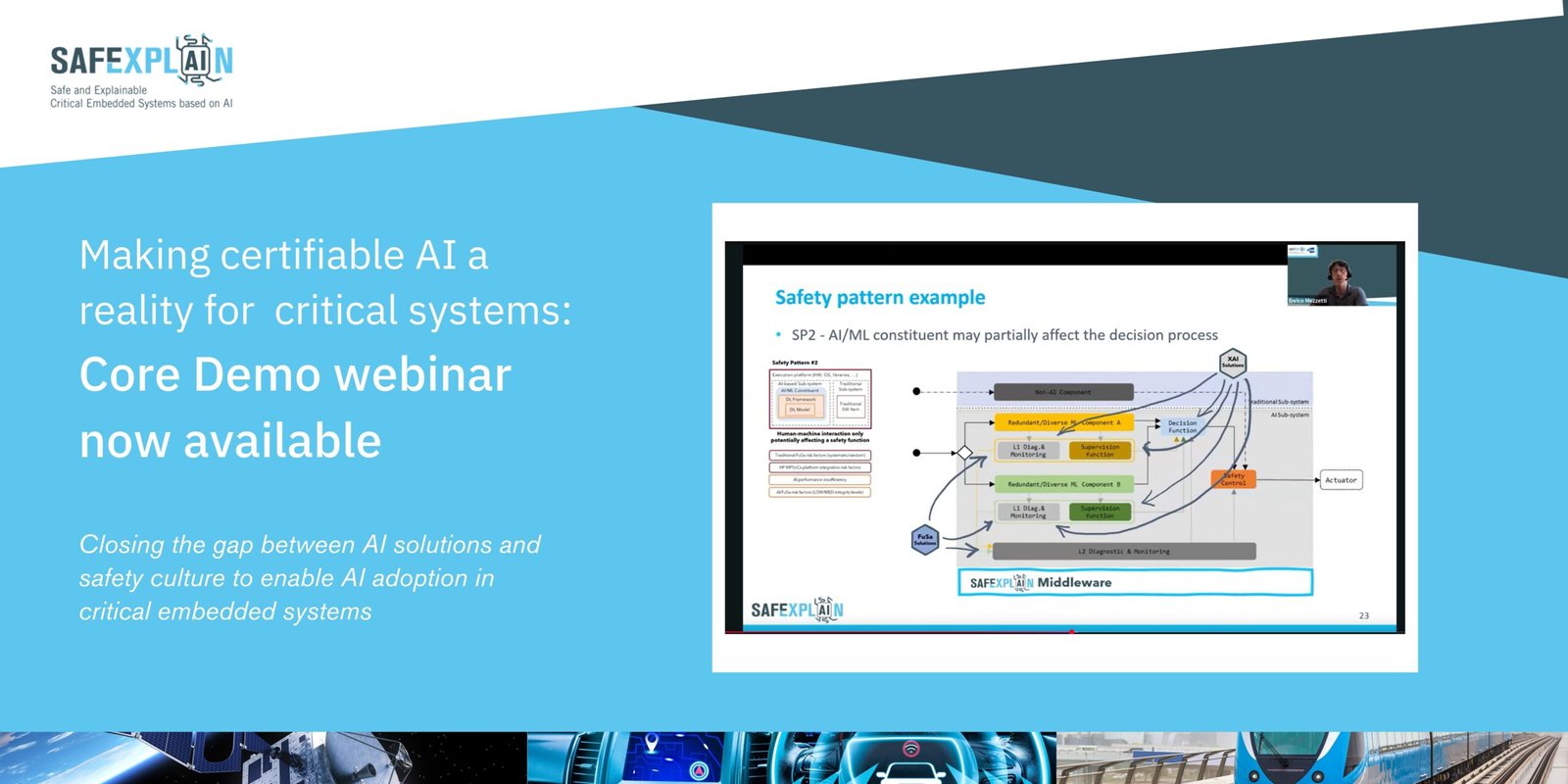

PRESS RELEASE: SAFEXPLAIN Unveils Core Demo: A Step Further Toward Safe and Explainable AI in Critical Systems

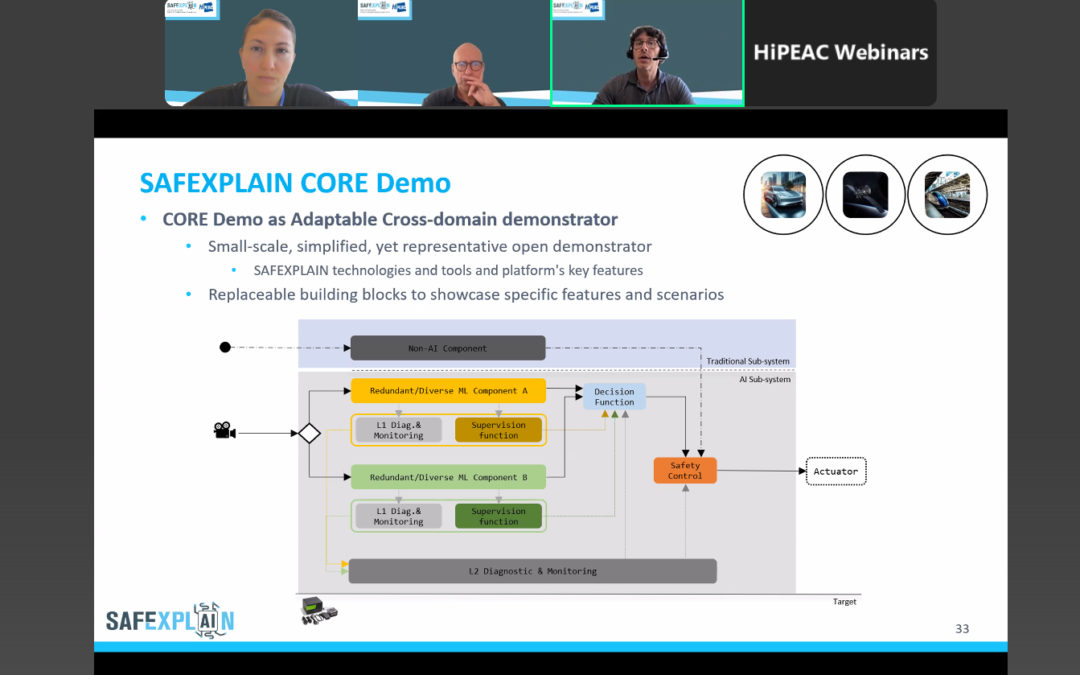

Barcelona, 03 July 2025. The SAFEXPLAIN project has publicly unveiled its Core Demo, offering a concrete look at how its open software platform can bring safe, explainable and certifiable AI to critical domains such as space, automotive and rail. Showcased in a...

Core Demo Webinar: Making certifiable AI a reality for critical systems

Now available!

The project’s Core Demo is a small-scale, modular demonstrator that highlights the platform’s key technologies and showcases how AI/ML components can be safely integrated into critical systems.

Showing SAFEXPLAIN Results in Action at ASPIN 2025

The 23rd Workshop on Automotive Software & Systems, hosted by Automotive SPIN Italia on 29 May 2025, proved to be a very successful forum for sharing SAFEXPLAIN results. Carlo Donzella from exida development and Enrico Mezzetti from the Barcelona Supercomputing...

Tackling Uncertainty in AI for Safer Autonomous Systems

Within the SAFEXPLAIN project, members of the Research Institutes of Sweden (RISE) team have been evaluating and implementing components and architectures that make AI dependable when used within safety-critical autonomous systems. To contribute to dependability...

SAFEXPLAIN shares its safety critical solutions with aerospace industry representatives

On 12 May 2025, the SAFEXPLAIN consortium presented its latest results to representatives of several aerospace and embedded-systems organisations, including Airbus DS, BrainChip, the European Space Agency (ESA), Gaisler and Klepsydra, showcasing major strides in making AI...

SAFEXPLAIN Update: Building Trustworthy AI for Safer Roads

Enhancing Railway Safety: Implementing Closed-Loop Validation with Unreal Engine 5 and ROS 2 Integration

For enhanced safety in AI-based systems in the railway domain, the SAFEXPLAIN project has been working to integrate cutting-edge simulation technologies with robust communication frameworks. Learn more about how we’re integrating Unreal Engine (UE) 5 with Robot Operating System 2 (ROS 2) to generate dynamic, interactive simulations that accurately replicate real-world railway scenarios.

Case Studies Update: Integrating XAI, Safety Patterns and Platform Development

The SAFEXPLAIN project has reached an exciting point in its lifetime: the integration of the outcomes of the different partners.

Work on the case studies began with the preparation of the AI algorithms and of the datasets required both for training and for simulating the operational scenarios. In parallel, the case studies have relied on support from the partners focused on explainable AI (XAI), safety patterns and platform development.

BoF workshop at Future Ready Solutions Event — Trustworthy AI: main innovations and future challenges

Project coordinator Jaume Abella from the Barcelona Supercomputing Center represented the project at the Birds of a Feather session "TrustworthyAI Cluster: Main innovations and future challenges", together with fellow cluster projects EVENFLOW, TALON and ULTIMATE.

BSC Webinar Demonstrates Interoperability of SAFEXPLAIN Platform Tech & Tools

The third webinar in the SAFEXPLAIN webinar series will present the innovative infrastructure behind the AI-FSM and XAI methodologies. Participants will gain insight into how the proposed solutions are integrated and how they are designed to enhance the safety, portability and adaptability of AI systems.