Written by Robert Lowe & Thanh Bui, Human-Centered AI, RISE, Sweden

As part of the SAFEXPLAIN project, researchers at RISE have been investigating how Deep Learning (DL) algorithms can be made dependable, i.e., functionally assured, in safety-critical systems such as cars, trains and satellites.

SAFEXPLAIN partner IKERLAN has worked to create a Functional Safety Management lifecycle for AI (AI-FSM), together with recommended safety pattern(s) that systematically account for AI when developing safety-critical systems. In collaboration with IKERLAN and the other members of the SAFEXPLAIN consortium, RISE has been establishing principles to ensure that DL components, together with the required explainable AI (XAI) support, comply with the guidelines set out by AI-FSM and the safety pattern(s).

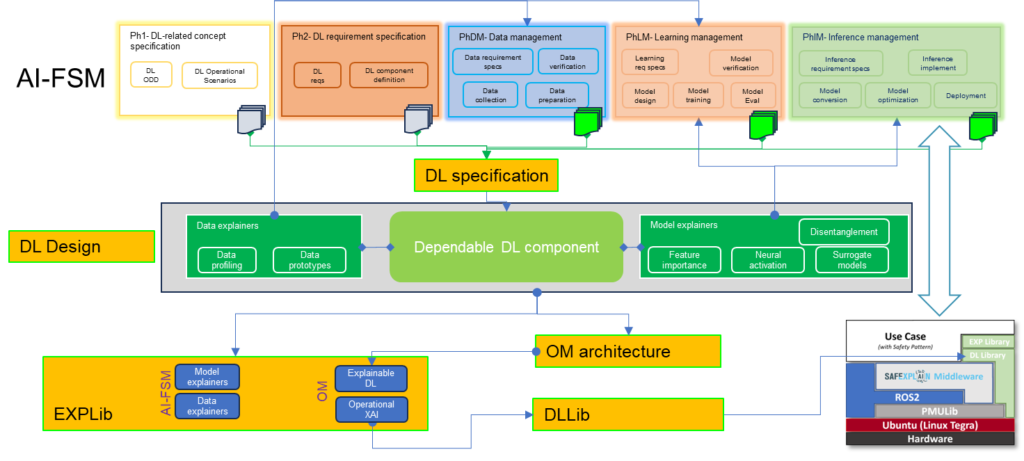

These explainability principles are required at each phase of the AI-FSM assurance lifecycle, specifically the Data Management phase (PhDM), the Learning Management phase (PhLM) and the Inference Management phase (PhIM). Figure 1 illustrates the AI-FSM lifecycle (top) and the related specifications that the Deep Learning component must meet to be dependable (DL specification). The DL design therefore requires Data explainers (to explain captured/prepared datasets and address domain uncertainty) and Model explainers (to explain the trained DL model and address epistemic model uncertainty).

RISE is also developing the Explainable Deep Learning Library (EXPLib), which provides functions and methods that help DL components comply with the guidelines proposed by AI-FSM (bottom of Figure 1). EXPLib, which is still under development, contains several functions designed to support verification, diagnosis and evidence generation for demonstrating compliance with AI-FSM.

Explanations extracted with explainable AI techniques are needed at different phases of the lifecycle. EXPLib interfaces with DLLib (bottom of Figure 1) to provide runtime components that support the deployment of the safety pattern(s) on the selected platform. RISE focuses on two key aspects: how DL components must address uncertainty, and how they must provide explainability, in the safety-critical scenarios that the SAFEXPLAIN use cases (railway, automotive, space) may encounter.

Uncertainty comprises i) domain uncertainty, where datasets do not adequately reflect their real-world counterparts; ii) epistemic uncertainty, where models may not capture sufficient knowledge or representations of the world they are designed to predict; and iii) aleatoric uncertainty, where noise inherent in the data (e.g., occlusions or sensor noise) cannot be eliminated. Processes for systematically reducing these forms of uncertainty during the development lifecycle, and for controlling the residual uncertainty during the operation and monitoring stage, are currently being tested.
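To make the difference between epistemic and aleatoric uncertainty more concrete, here is a minimal sketch of one common technique from the literature: an entropy-based decomposition over the softmax outputs of several ensemble members (or repeated Monte Carlo dropout passes). This is an illustrative assumption, not the method used in EXPLib or prescribed by AI-FSM. The function names and the `max_epistemic` threshold are hypothetical, and domain uncertainty (dataset shift) is not covered.

```python
import math
from typing import List, Tuple


def entropy(p: List[float]) -> float:
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(x * math.log(x) for x in p if x > 0.0)


def decompose_uncertainty(member_probs: List[List[float]]) -> Tuple[float, float, float]:
    """Split predictive uncertainty into (total, aleatoric, epistemic).

    member_probs holds one softmax vector per ensemble member (or per
    MC-dropout pass) for the same input. Hypothetical helper for illustration.
    """
    if not member_probs:
        raise ValueError("need at least one member prediction")
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    # Average the members' distributions to get the ensemble prediction.
    mean_p = [sum(p[c] for p in member_probs) / n_members for c in range(n_classes)]
    # Total uncertainty: entropy of the averaged prediction.
    total = entropy(mean_p)
    # Aleatoric part: average entropy of each member (noise every member sees).
    aleatoric = sum(entropy(p) for p in member_probs) / n_members
    # Epistemic part: mutual information, i.e. disagreement between members.
    epistemic = total - aleatoric
    return total, aleatoric, epistemic


def accept_prediction(member_probs: List[List[float]], max_epistemic: float = 0.1) -> bool:
    """Hypothetical runtime monitor: reject (defer to a safe fallback)
    when member disagreement exceeds an assumed threshold."""
    _, _, epistemic = decompose_uncertainty(member_probs)
    return epistemic <= max_epistemic


if __name__ == "__main__":
    noisy_input = [[0.5, 0.5], [0.5, 0.5]]   # members agree, but each is unsure
    unfamiliar = [[1.0, 0.0], [0.0, 1.0]]    # members are confident but disagree
    print(decompose_uncertainty(noisy_input), accept_prediction(noisy_input))
    print(decompose_uncertainty(unfamiliar), accept_prediction(unfamiliar))
```

In both example inputs the total uncertainty is the same (ln 2), but for `noisy_input` it is entirely aleatoric, whereas for `unfamiliar` it is entirely epistemic. In the second case the hypothetical monitor therefore rejects the prediction, which loosely mirrors the idea of controlling residual uncertainty during operation.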

RISE’s work on Explainable AI within the SAFEXPLAIN project will be discussed in greater detail in the HiPEAC-hosted webinar "Explainable AI for systems with Functional Safety Requirements" on 23 October. More information on the webinar can be found here.

This article is an update to the RISE article prepared in May 2023, available here.