Barcelona, 13 February 2023. – The EU-funded SAFEXPLAIN (Safe and Explainable Critical Embedded Systems based on AI) project, launched on 1 October 2022, seeks to lay the foundation for smarter and safer Critical Autonomous AI-based Systems (CAIS) applications by ensuring that they adhere to functional safety requirements in environments that demand fast, real-time responses and that increasingly run on the edge. This three-year project brings together a six-partner consortium representing academia and industry.

AI technology offers the potential to improve the competitiveness of European companies, and the AI market itself is expected to reach $191 billion by 2024 in response to companies’ growing demand for mature autonomous and intelligent systems. CAIS are becoming especially ubiquitous in industries like rail, automotive and space, where the digitization of CAIS offers huge benefits to society, including safer roads, skies and airports through the prevention of 90% of collisions per year and the reduction of up to 80% of the CO2 profile of different types of vehicles.

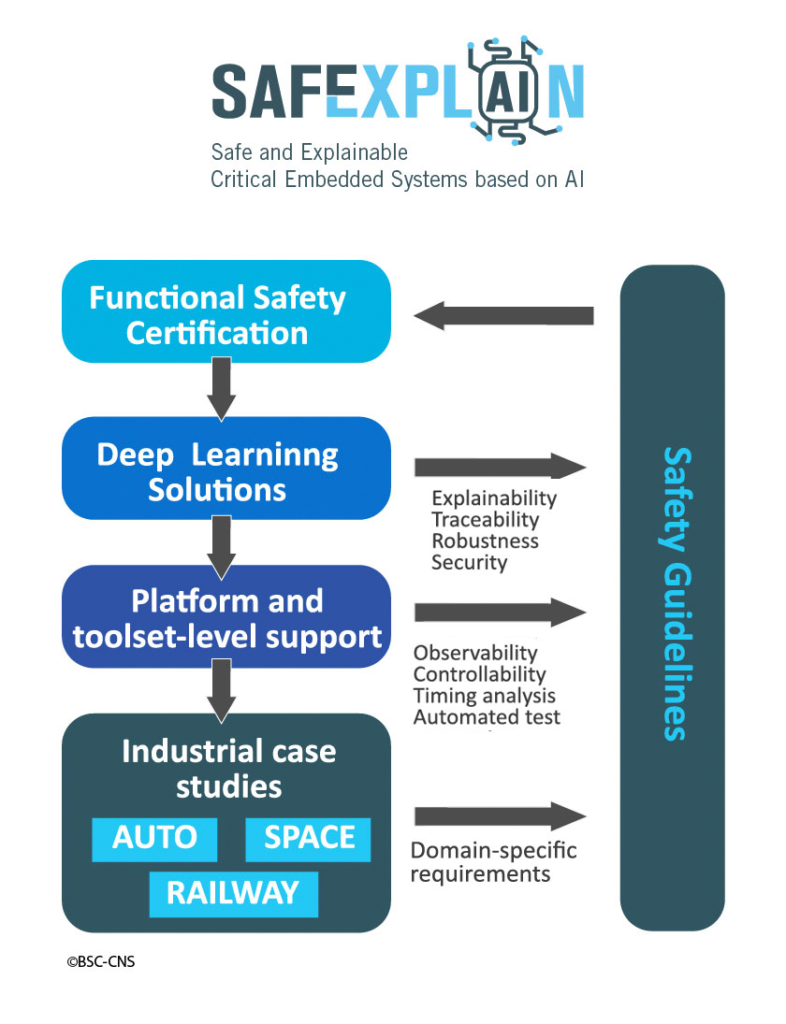

Deep Learning (DL) technology that supports AI is key for most future advanced software functions in CAIS. However, there is a fundamental gap between the Functional Safety (FUSA) requirements of CAIS and the nature of DL solutions. The lack of transparency (mainly explainability and traceability) and the data-dependent and stochastic nature of DL software clash with the need for clear, verifiable and pass/fail test-based software solutions for CAIS. SAFEXPLAIN tackles this challenge by providing a novel and flexible approach for the certification – and hence adoption – of DL-based solutions in CAIS.

Jaume Abella, SAFEXPLAIN coordinator, highlights that “this project aims to rethink FUSA certification processes and DL software design to set the groundwork for how to certify DL-based fully autonomous systems of any type beyond very specific and non-generalizable cases existing today.”

Three case studies will illustrate the benefits of SAFEXPLAIN technology in the automotive, railway and space domains. Each domain has its own stringent safety requirements set by its respective safety standards, and the project will tailor automotive and railway certification systems and space qualification approaches to enable the use of new FUSA-aware DL solutions.

To benefit wider groups of society, the technologies developed by the project will be integrated into an industrial toolset prototype. Various IP and implementations will be available as open source, along with specific practical examples of their use, to give end-users the tools to develop such applications.

About SAFEXPLAIN

SAFEXPLAIN (Safe and Explainable Critical Embedded Systems based on AI) is a HORIZON Research and Innovation Action financed under grant agreement 101069595. The project began on 1 October 2022 and will end in September 2025. It is formed by an interdisciplinary consortium of six partners coordinated by the Barcelona Supercomputing Center (BSC). The consortium is composed of three research centers, RISE (Sweden; AI expertise), IKERLAN (Spain; FUSA and railway expertise) and BSC (Spain; platform expertise), and three CAIS industries, NAVINFO (Netherlands; automotive), AIKO (Italy; space) and EXIDA DEV (Italy; FUSA and automotive).