Contribution by Ikerlan

SAFEXPLAIN’s railway case study is based on Automatic Train Operation (ATO). Project partner Ikerlan is in charge of this case and is assessing the viability of a safety architectural pattern. This pattern will be composed of artificial vision system elements that act as “sensors”, feeding information to safety-related software elements.

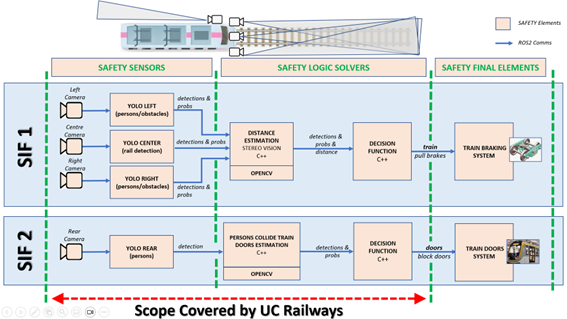

The case study will implement two safety functions. They will: (1) minimize the risk of the train running over or injuring people on the track, colliding with obstacles on the track, or injuring a passenger on board as a consequence of either of these events; and (2) minimize the risk of the train doors injuring passengers while opening or closing at the platform. Figure 1 shows the high-level SW architecture of the safety functions.

Closing the gap through two scenarios

The challenge faced by the railway case study is closing the gap between Functional Safety requirements and the nature of Deep Learning (DL) solutions. Functional Safety systems need deterministic, verifiable software whose correctness can be established through pass/fail tests. DL-based solutions, however, while able to handle greater complexity, lack explainability and traceability. They depend heavily on data, and their stochastic nature shows up as limited robustness, security and fault tolerance.

- The first scenario, for Safety Function 1 (brake activation), consists of a simulated autonomous train running on a track, equipped with a front stereo camera and a centre camera (left/centre/right views) that capture the scene ahead, producing image pairs at a rate of at least 10 frames per second. In this scenario, people or obstacles may appear on the track. Parameters such as the train’s maximum speed, the lighting conditions for the cameras and the maximum detection distance are still being defined. Pre-recorded videos/images will be used for the case study.

- The second scenario, for Safety Function 2 (train door blocking), consists of an autonomous train that stops at a station platform and needs to open and close its doors safely. Parameters such as the lighting conditions for the cameras still need to be specified. Pre-recorded videos/images will be used for the case study.

AI explainability techniques studied in SAFEXPLAIN may support the safety certification process by providing a body of evidence that helps demonstrate to certification authorities that AI-based sensors are properly trained and work as expected.