by Janine Marie Gehrig Lux | Feb 3, 2026 | Uncategorized

The project’s final 11 deliverables are now available. Access the project’s final results. The SAFEXPLAIN deliverables provide key details about the project and its final outcomes. The following deliverables have been completed for public dissemination and...

by Janine Marie Gehrig Lux | Nov 24, 2025 | Uncategorized

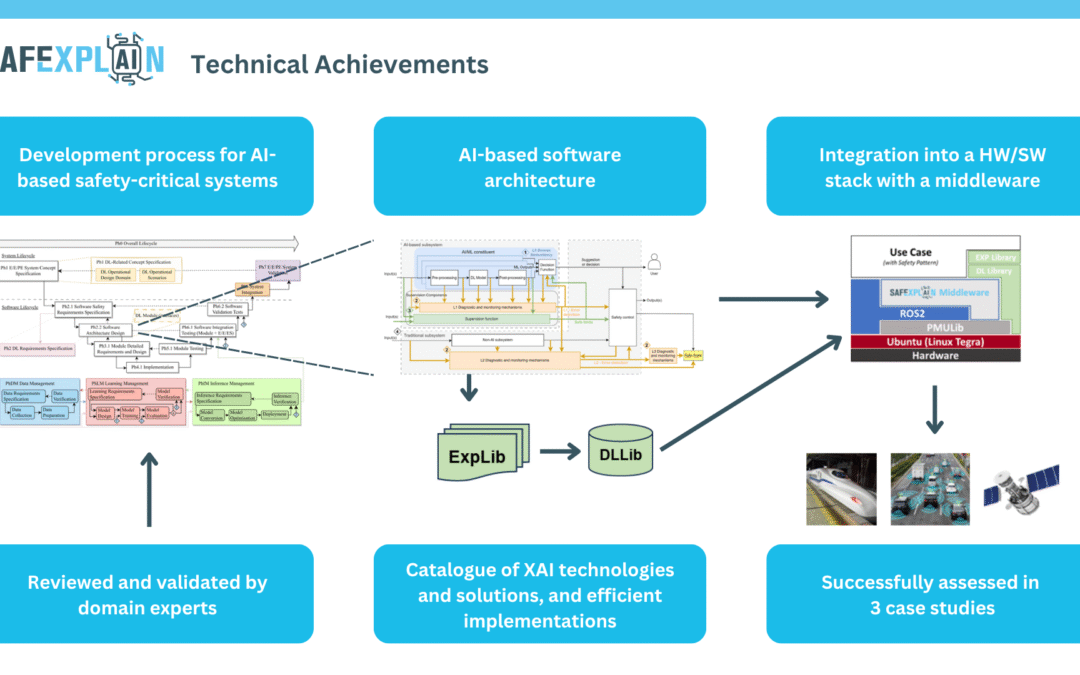

After three years of research and collaboration, the EU-funded SAFEXPLAIN project, coordinated by the Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS), has released its final results: a complete, validated and independently assessed...

by Janine Marie Gehrig Lux | Nov 5, 2025 | Uncategorized

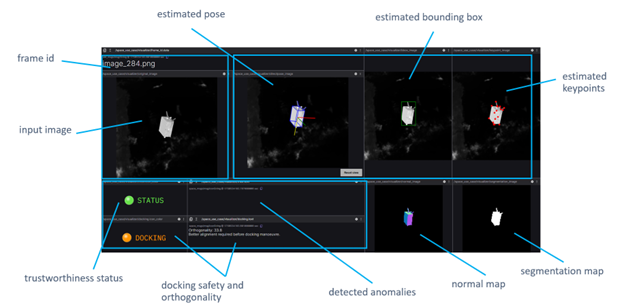

The space case study takes place in a scenario highly relevant to the space industry: a spacecraft agent navigating towards a target satellite and attempting a docking manoeuvre. This is a typical situation in an In-Orbit Servicing mission. The case study...

by Janine Marie Gehrig Lux | Nov 5, 2025 | Uncategorized

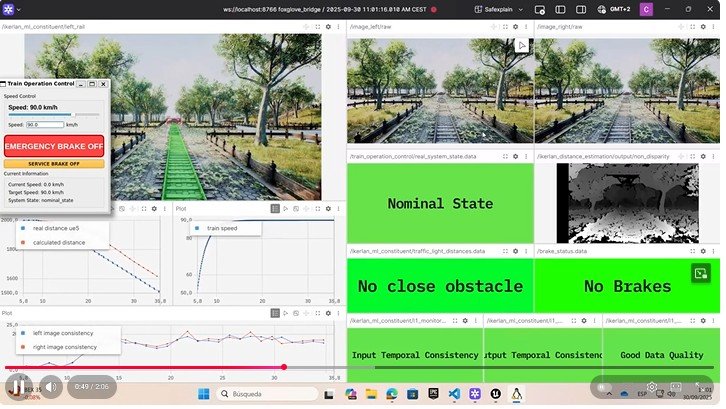

The SAFEXPLAIN railway demo comprises an Automatic Train Operation (ATO) system that detects obstacles on the railway and estimates their distance with the aid of cameras (i.e., right and left cameras). It is based on a ROS2 architecture and consists of four main...

by Janine Marie Gehrig Lux | Oct 14, 2025 | Uncategorized

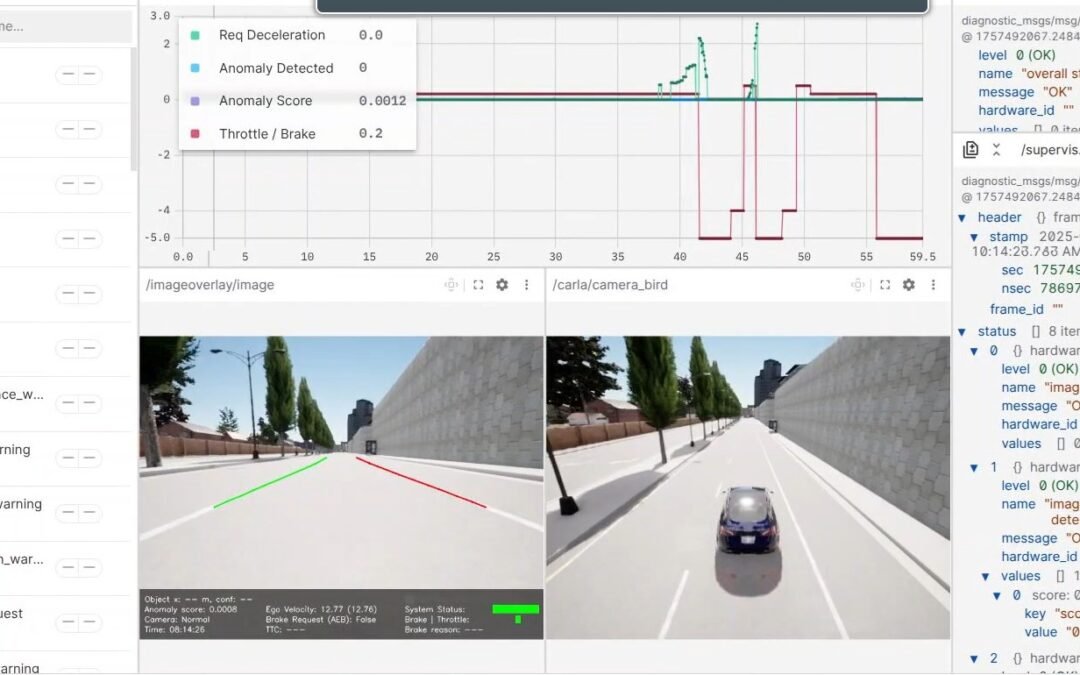

The final phase of the automotive case study focused on building and validating a ROS2-based demonstrator that implements safe and explainable pedestrian emergency braking. Our architecture integrates AI perception modules (YOLOS-Tiny pedestrian detector, lane...

by Janine Marie Gehrig Lux | Oct 8, 2025 | Uncategorized

On 23 September 2025, members of the SAFEXPLAIN consortium joined forces with fellow European projects ULTIMATE and EdgeAI-Trust for the event “Trustworthy AI in Safety-Critical Systems: Overcoming Adoption Barriers.” The gathering brought together experts from...
