News

EV community at Innovex 24 welcomes presentation by SAFEXPLAIN

SAFEXPLAIN partner Carlo Donzella, from exida development, opens EV session with keynote on "Enabling the Future of EV with TrustworthyAI". June 6, 2024 marked an important opportunity for the SAFEXPLAIN project to share project results with key audiences from the...

Successful showcase of SAFEXPLAIN use cases at Trustworthy AI webinar

SAFEXPLAIN partner Enrico Mezzeti from the Barcelona Supercomputing Center joined eight other Horizon Europe-funded projects under call HORIZON-CL4-2021-HUMAN-01-01 to present the project's work on TrustworthyAI and its implications for its use cases. The nine projects,...

A Tale of Machine Learning Process Models at Automotive SPIN Italia

Carlo Donzella from exida development presents at the Automotive SPIN Italia 22º Workshop on Automotive Software & System. SAFEXPLAIN partner Carlo Donzella, from exida development, presented at the Automotive SPIN Italia 22º Workshop on Automotive Software &...

SAFEXPLAIN seeks synergies within TrustworthyAI Cluster

Representatives of the coordinating teams of SAFEXPLAIN and ULTIMATE met to share progress and lessons learnt, and to look for potential synergies. They delved deeper into the topic that concerns both projects: TrustworthyAI.

Safely docking a spacecraft to a target vehicle

The space scenario envisions a crewed spacecraft performing a docking manoeuvre with an uncooperative target (a space station or another spacecraft) at a specific docking site. The guidance, navigation and control (GNC) system must be able to estimate the pose of the docking target and of the spacecraft itself, compute a trajectory towards the target, and send commands to the actuators to perform the docking manoeuvre. The safety goal is to dock with adequate precision and avoid crashing into or damaging the assets.

Halfway through the project, RISE hosts consortium in Lund

SAFEXPLAIN consortium meets halfway through the project at RISE venue in Lund. With the first 18 months of the project behind it, the SAFEXPLAIN consortium met in Lund on 16-17 April to discuss project status and next steps for the coming 18 months. Great strides have...

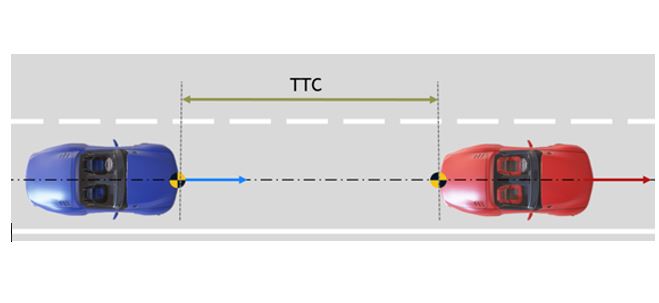

Exploring AI-specific redundancy patterns

AI-generated image of object detection for automotive. Artificial intelligence, and more specifically Deep Learning algorithms, are used for visual perception classification tasks, like camera-based object detection. For these tasks to work, they need to identify the...

SAFEXPLAIN Opens CARS WS and Shares Work on AI-FSM

Jon Perez-Cerrolaza presenting the CARS WS keynote. The SAFEXPLAIN project opened the 8th edition of the Critical Automotive applications: Robustness & Safety (CARS) workshop on 8 April 2024 with a keynote talk delivered by Ikerlan partner Jon Perez-Cerrolaza on...

SAFEXPLAIN Reaches out to industry at MWC24

Figure 1: Project coordinator, Jaume Abella, at the BSC booth at MWC24. The 2024 Mobile World Congress in Barcelona offered the SAFEXPLAIN project the opportunity to meet key industry players from the global mobile ecosystem. Moreover, it granted the project partner...

Exida in SAFEXPLAIN: Extending Functional Safety Compliance to Machine Learning Applications, NOW

Exida is part of two technical pillars that are associated with two major work packages (WP) of the project: WP2 (Safety Assessment) and WP4 (Platforms and Toolset Support). The intermediate results of both are presented in this text.