On 23 October 2024, Dr Robert Lowe, Senior Researcher in AI and Driver Monitoring Systems at the Research Institutes of Sweden, discussed the integration of explainable AI (XAI) algorithms into the machine learning (ML) lifecycle of safety-critical systems, i.e., systems with functional safety requirements, in a HiPEAC-hosted webinar. The webinar provided an overview of the complexities of XAI with regard to functionality, transparency and compliance with safety standards.

Project coordinator Dr Jaume Abella opened the webinar by setting out the context of this research within the EU-funded SAFEXPLAIN project.

Dr Lowe took over with a comprehensive explanation of how XAI is being used to support the AI-Functional Safety Management methodology created by IKERLAN and a walkthrough of explainable AI for systems with functional safety requirements.

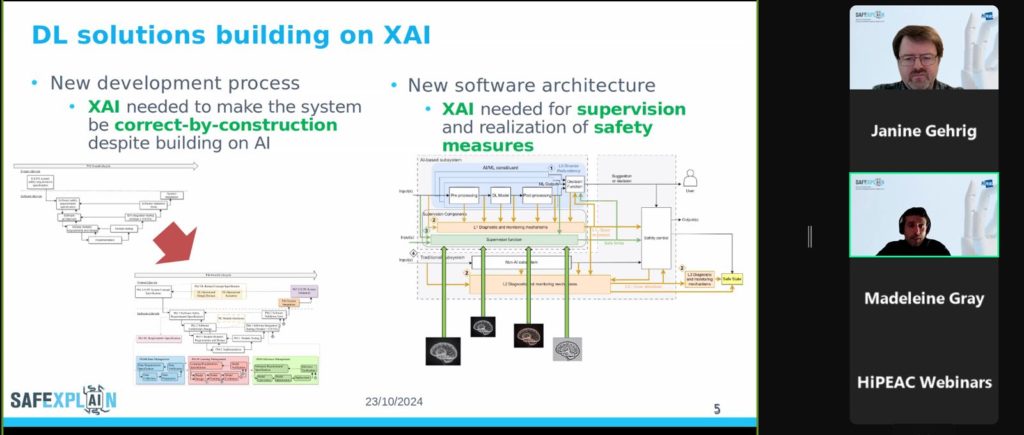

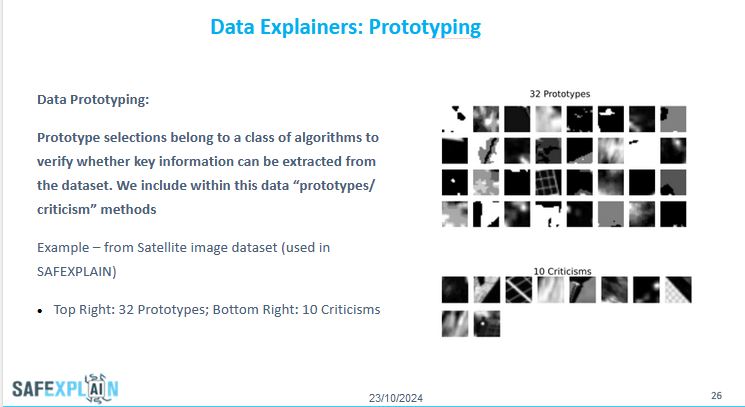

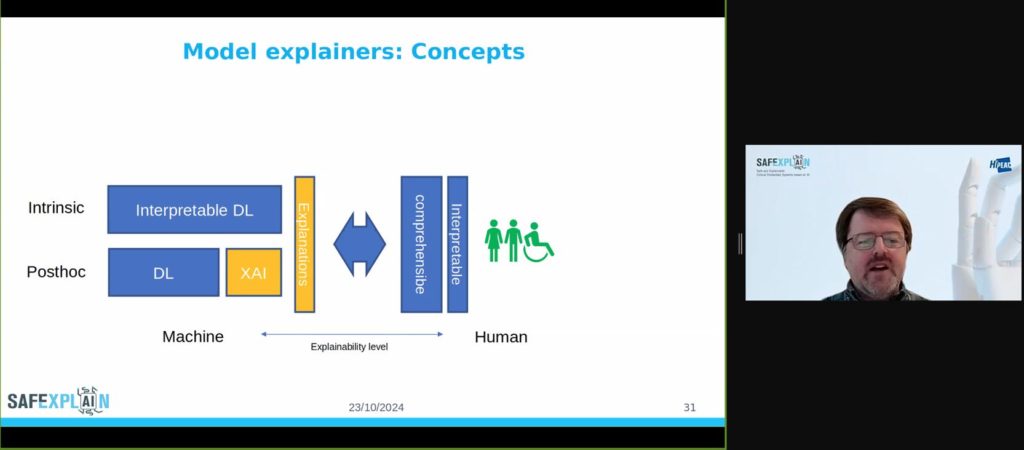

A key part of the webinar was an introduction to the SAFEXPLAIN “explainability by design” approach. This approach seeks to provide a systematic way of integrating XAI into AI-based systems and the ML lifecycle, especially in domains where safety is non-negotiable. The roles of data explainers and model explainers were also introduced.

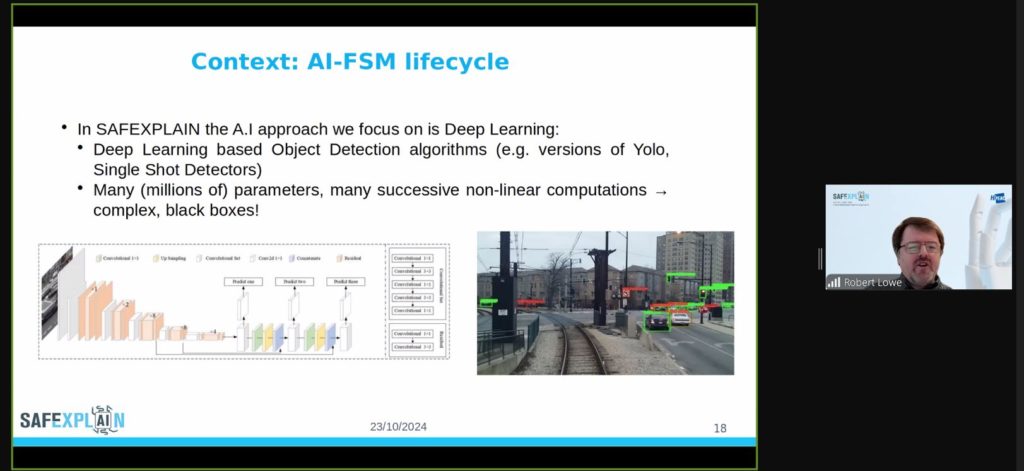

After developing these concepts and their use within the project’s research, Dr Lowe provided a walkthrough of explainable AI for systems with functional safety requirements, specifically making deep learning (DL) components dependable within the AI-FSM lifecycle and deploying DL components in compliance with safety patterns and DL libraries.

Want to know more?

Did you miss our first webinar on AI-Functional Safety Methodology Management? Watch it here.

Learn more about the speaker

Dr Robert Lowe is a researcher in Artificial Intelligence and Driver Monitoring Systems at the Research Institutes of Sweden (RISE AB). He is also Associate Professor (Docent) at the University of Gothenburg, Department of Applied IT, in Cognitive Science.

Dr Lowe joined RISE in 2023 as a senior researcher and is part of the Human-Centred AI unit in the department of mobility and systems. His research concerns the application of AI in autonomous (and partially autonomous) systems, with a focus on using AI and models of driver behaviour to mitigate safety-critical events, as well as on the safe use of AI in such use cases. He conducts research on, and coordinates, a number of related projects within Sweden, such as the Vinnova-funded IntoxEye (REF: 2023-02606) and I-AIMS (REF: 2023-03068), and carries out research on the SAFEXPLAIN project.