On 23 September 2025, members of the SAFEXPLAIN consortium joined forces with fellow European projects ULTIMATE and EdgeAI-Trust for the event “Trustworthy AI in Safety-Critical Systems: Overcoming Adoption Barriers.” The gathering brought together experts from academia, industry, and regulatory bodies to explore the key challenges and opportunities in deploying trustworthy AI across safety-critical domains such as automotive, rail, and space.

The event opened with a welcome by Irune Agirre, Dependability and Cybersecurity Methods Team Leader from IKERLAN, who introduced the project coordinators organizing the event and recalled their shared mission: to reconcile AI techniques with safety and ethical requirements to improve explainability, traceability and runtime assurance for critical embedded systems.

Project coordinators Jaume Abella (SAFEXPLAIN), Michel Barreteau (ULTIMATE) and Mohammed Abuteir (EdgeAI-Trust) briefly presented their projects before joining a panel discussion moderated by Irune.

The panel reflected on the broader AI landscape, including rising regulatory pressure for trustworthy AI, the gap between current certification practices and AI-based systems, and the challenges of trust, ethics, transparency and robustness in high-stakes applications.

The breaks offered time for exchange, networking and poster sessions. Four posters showcased novel methods and early results from:

- CAPSULIA – Encapsulation Of AI-Based Solutions To Accelerate Their Adoption

- EdgeAI-Trust – Decentralized Edge Intelligence: Advancing Trust, Safety, and Sustainability in Europe

- SMARTY: Scalable and Quantum Resilient Heterogeneous Edge Computing enabling Trustworthy AI

- PQC4eMRTD: Post-Quantum Cryptography for electronic Machine-Readable Travel Documents

The audience then broke into two tracks, one dedicated to SAFEXPLAIN and EdgeAI-Trust and another dedicated to ULTIMATE, allowing for a deeper look into each project’s results and tools.

During the morning session, SAFEXPLAIN partners presented:

- Safety-relevant AI development process and software architecture (Javier Fernández, IKERLAN; Giuseppe Nicosia, exida development)

- AI explainability solutions to build safety-relevant AI-based software architectures (Thanh Bui, RISE)

- Platform support to enable safety-relevant AI-based systems (Enrico Mezzetti, BSC)

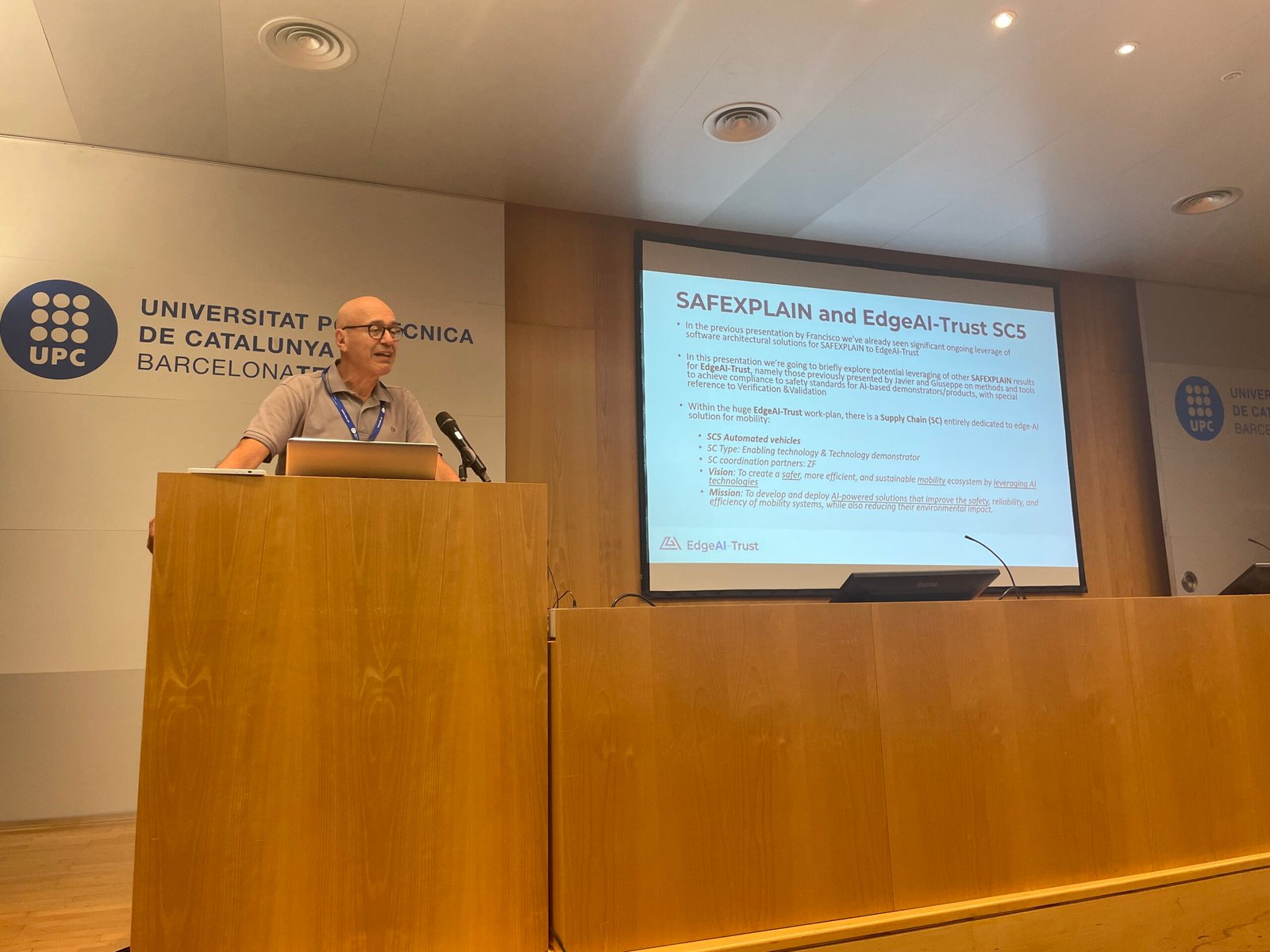

EdgeAI-Trust representatives Francisco J. Cazorla (BSC) and Carlo Donzella (exida development) shared insights into the work developed in the EdgeAI-Trust project.

As part of its morning track, ULTIMATE shared its end-to-end Trustworthy AI approach (including ethics) and the different trustworthy AI activities along the hybrid AI lifecycle for a satellite use case (see agenda for speaker details).

In the afternoon session, ULTIMATE presented its remaining use cases, namely the "Robotic workshop" and the "Industrial mobile manipulators" cases. The related trustworthy AI activities across the Design & Development, Algorithm Evaluation and Execution under Operational Conditions steps showcased innovative hybrid AI solutions addressing the trustworthy AI criteria of accuracy, reliability, explainability, robustness and ethics.

The SAFEXPLAIN afternoon session focused on demonstrations of AI-based safety-critical systems, with presentations by:

The session closed on a positive note, with the project coordinators offering a forward look at AI in safety-critical systems, including the need for explainability, ethics and human-in-the-loop options, as well as the new challenges and opportunities posed by GenAI.

While the "Trustworthy AI in Safety-Critical Systems" event marked the end of the official timeline of the SAFEXPLAIN and ULTIMATE projects, it also underscored how the results of all three projects will live on through community engagement, adoption and continued technological development. With the momentum and visibility gained, SAFEXPLAIN and ULTIMATE have laid a strong foundation for the next generation of trustworthy AI in critical systems, while also demonstrating how the two projects complement each other.