News

High interest in SAFEXPLAIN tech @ Gate4SPICE INTACS event

The SAFEXPLAIN keynote at the INTACS event “Optimal Performance of Modern Development: Automotive SPICE® Fusion with Intelligent Systems and Agile Frameworks”, hosted by SEITech Solutions GmbH as part of Gate4SPICE, was extremely well received by attendees. The...

SAFEXPLAIN deliverables now available!

Twelve deliverables reporting on the work undertaken by the project have been published in the results section of the website. The SAFEXPLAIN deliverables provide key details about the project and how it is progressing. The following deliverables have been created for...

SAFEXPLAIN takes part in 1st intacs® certified ML for Automotive SPICE® (pilot) training

SAFEXPLAIN partner exida development provided invaluable contributions to the two-day pilot of the intacs® certified machine learning (ML) for Automotive SPICE® training.

Integrating the Railway Case Study into the Reference Safety Architecture Pattern

Within the SAFEXPLAIN (SE) project, project partner Ikerlan leads the railway case study (CS), which is specifically centred on Automatic Train Operation (ATO). This article highlights how this CS is integrated into the reference safety architecture, building on the...

BSC’s Francisco J. Cazorla Delivers Keynote at prestigious 36th ECRTS Conference

BSC research on real-time embedded systems takes center stage at premier European conference. Francisco J. Cazorla from BSC delivers keynote at the 36th ECRTS. The 36th Euromicro Conference on Real-Time Systems is a major international conference showcasing the latest...

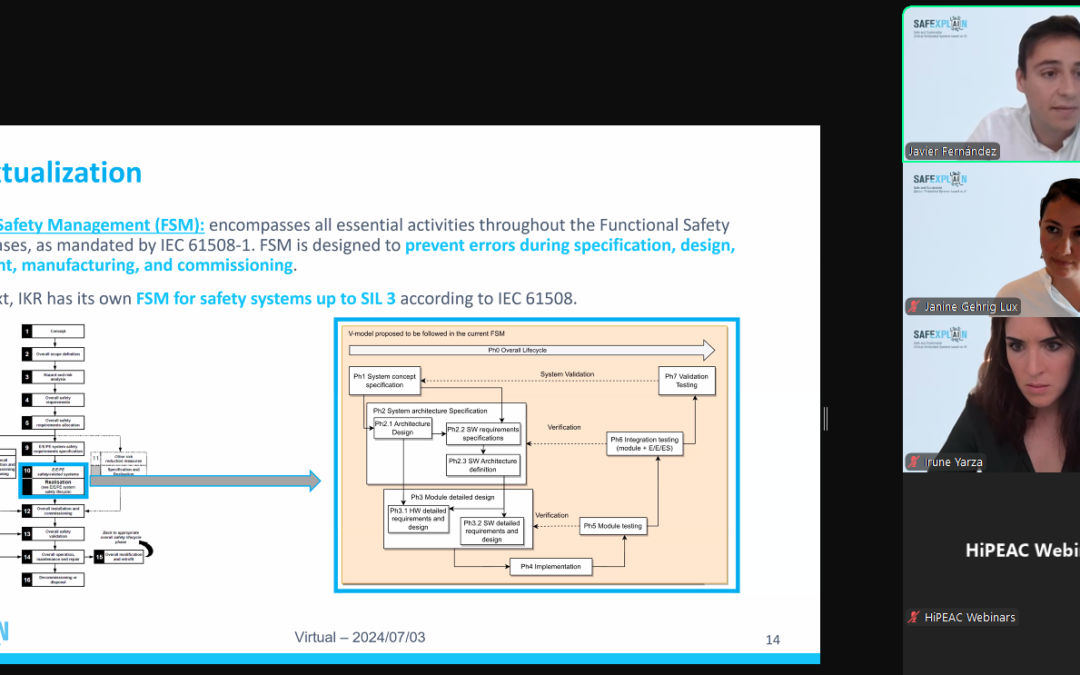

IKERLAN Webinar Provides Key Insights into AI-Functional Safety Management

Dr Javier Fernández Muñoz from IKERLAN contextualizes the current state of AI and Functional Safety Management. On 4 July 2024, speakers from IKERLAN shared an in-depth look into the SAFEXPLAIN project developments in AI-Functional Safety Management (FSM) methodology....

SAFEXPLAIN joins EU AI Community with Digital Booth @ ADR Exhibition

The 2024 European Convergence Summit, hosted by the AI, Data and Robotics Association ecosystem, was held online on 19 June 2024 and brought together influential players from AI, Data and Robotics to discuss the impact of these technologies on society. The summit...

SAFEXPLAIN invited talk, workshop and panel participations at 28th Ada-Europe conference

Coordinator Jaume Abella introduces Irune Yarza as part of the SAFEAI workshop co-located with the 28th Ada-Europe conference. The 28th Ada-Europe International Conference on Reliable Software Technologies (AEiC 2024) was held in Barcelona, Spain from 11 to 14 June 2024. This...

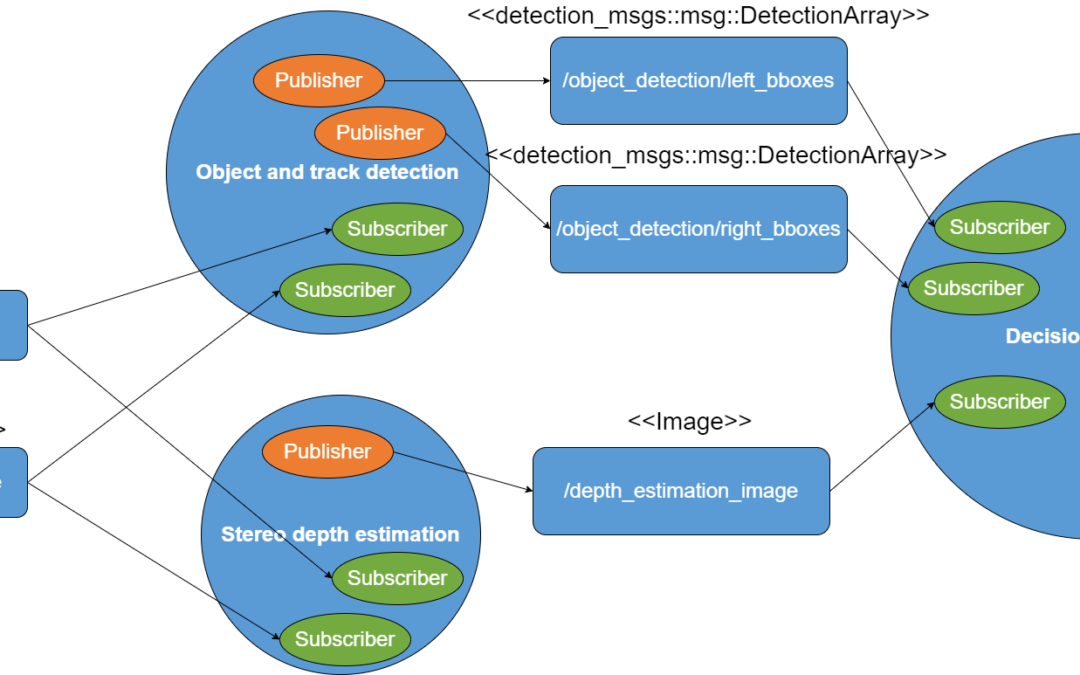

Developing safe and explainable AI for autonomous driving: Automotive case study

NAVINFO has been working to validate the real-world applicability of their work by deploying an autonomous driving system on an embedded compute platform. Two videos showcase the performance of their driving agent in relevant safety scenarios.

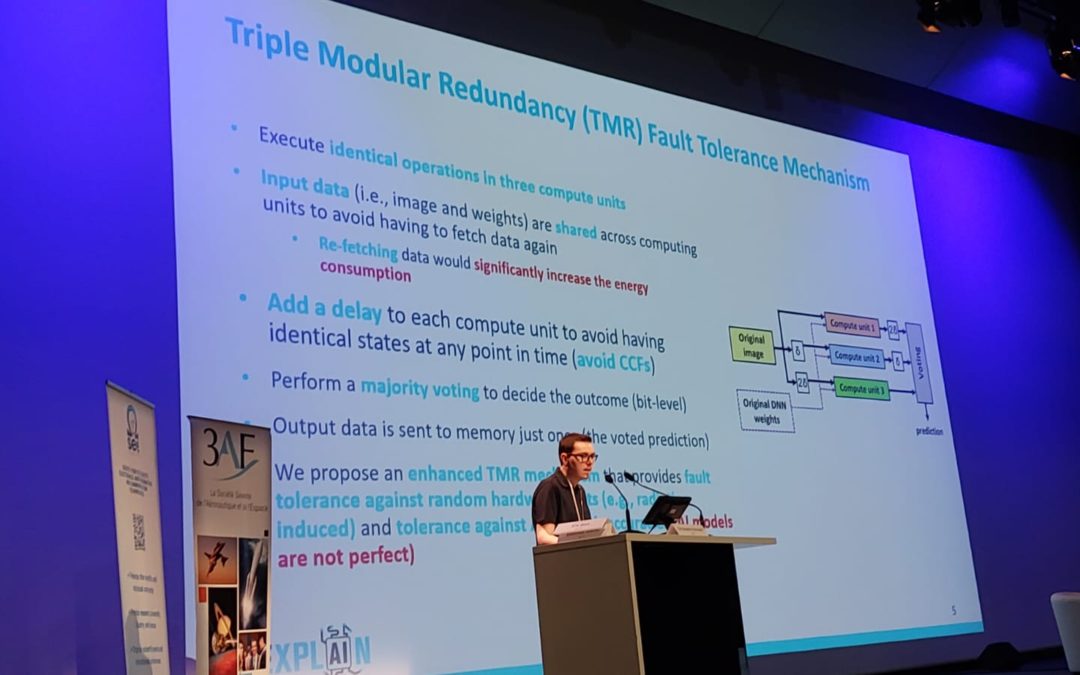

SAFEXPLAIN shares strategies for diverse redundancy in ML/AI Critical Systems session at ERTS ’24

Martí Caro from the Barcelona Supercomputing Center presents at the 2024 Embedded Real Time System Congress. Barcelona Supercomputing Center researcher Martí Caro presented "Software-Only Semantic Diverse Redundancy for High-Integrity AI-Based Functionalities" at the...