by Janine Marie Gehrig Lux | Dec 20, 2023 | Uncategorized

Exploiting the computational power of complex hardware platforms is opening the door to more extensive and accurate Artificial Intelligence (AI) and Deep Learning (DL) solutions. Performance-eager AI-based solutions are a common enabler of increasingly complex and...

by Janine Marie Gehrig Lux | Dec 14, 2023 | Uncategorized

In an important checkpoint for the SAFEXPLAIN project, the project consortium met with an Industrial Advisory Board composed of eight influential industry actors on 24 November 2023. At just over the one-year point, this meeting sought to present the project's...

by Janine Marie Gehrig Lux | Nov 20, 2023 | Uncategorized

Horizon Europe supports nine initiatives to boost solid and trustworthy AI across Europe. Nine projects funded under Horizon Europe call HORIZON-CL4-2021-HUMAN-01-01 will pave the way for the widespread acceptance of Artificial Intelligence (AI) across Europe....

by Janine Marie Gehrig Lux | Oct 23, 2023 | Uncategorized

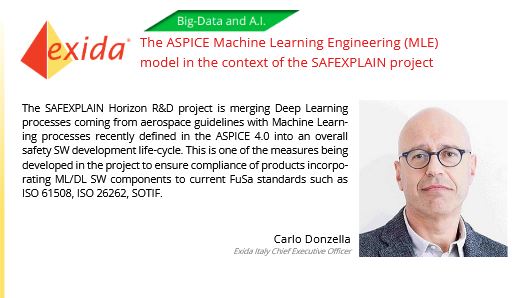

EXIDA partner Carlo Donzella presents an initial comparison of the ASPICE and SAFEXPLAIN models at the 2023 EXIDA Automotive Symposium. SAFEXPLAIN partner exida presented the SAFEXPLAIN project at the exida-hosted Automotive Symposium on 18 October 2023 in...

by Janine Marie Gehrig Lux | Oct 18, 2023 | Uncategorized

IKERLAN partner Jon Perez-Cerrolazo presents the AI and Safety survey prepared by the consortium at the TÜV Rheinland International Symposium 2023. The 2023 TÜV Rheinland International Symposium was held in Boston, USA, on 16-17 October. This two-day event brought...
