Exida in SAFEXPLAIN: Extending Functional Safety Compliance to Machine Learning Applications, NOW

Exida contributes to two technical pillars associated with two major work packages (WPs) of the project: WP2 (Safety Assessment) and WP4 (Platforms and Toolset Support). This text presents the intermediate results of that work.

Celebrating Women and Girls in Science Day with advice for young scientists

We're celebrating the 9th anniversary of the #FEBRUARY11 Global Movement with a look at the women in science and technology working on the project.

The SAFEXPLAIN project benefits from the participation of many women in science who are driving its success. See what advice they have for young scientists.

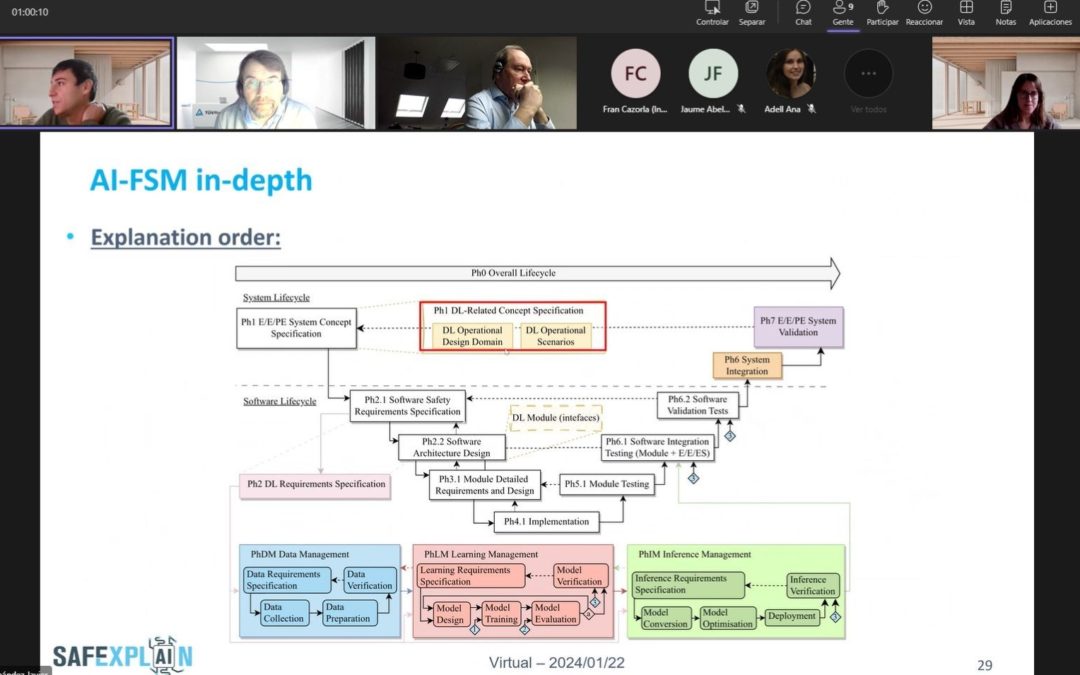

Integrating AI into Functional Safety Management

SAFEXPLAIN is developing an AI-Functional Safety Management (AI-FSM) methodology that guides the development process, maps the traditional lifecycle of safety-critical systems onto the AI lifecycle, and addresses the interactions between the two. AI-FSM extends widely adopted FSM methodologies derived from functional safety standards to the specific needs of Deep Learning, covering architecture specification, data, learning and inference management, and the appropriate testing steps. The AI-FSM developed in SAFEXPLAIN draws on recommendations from IEC 61508 [5], EASA [6], ISO/IEC 5469 [3], AMLAS [7] and ASPICE 4.0 [8], among others.

Certification bodies weigh in on SAFEXPLAIN functional safety management methodologies integrating AI

SAFEXPLAIN partners from IKERLAN and the Barcelona Supercomputing Center met with TÜV Rheinland experts on 22 January 2024 to share the project's AI-Functional Safety Management (AI-FSM) methodology. The meeting gave the project a valuable opportunity to present its work to a key player in safety certification.
